6. Maths4ML: Determinants & Eigenvectors

Matrices are opaque

As established in the last blog post on matrices, they are machines that transform any vector fed into them. But an arbitrary matrix doesn't reveal what it is doing to the data. To find the DNA of a matrix, these 2 tools are needed :

- Determinant : tells how much space is expanding or shrinking.

- Eigenvectors : tell along which lines the stretching is happening.

Determinant (Scaling Factor)

To get the geometric intuition, let a unit square sit at the origin. The area of the square is 1. When a matrix (linear transformation) is applied to this space, the grid lines warp. The square may stretch into a long rectangle, skew into a diamond or even shrink into a dot.

Determinant is the new area of the square.

- Det = 2 : Space is stretching. Area of square doubles.

- Det = 0.5 : Space is contracting. Area is halved.

- Det = 1 : Shape might change. Area remains the same.
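The area claim can be checked numerically. The sketch below is only an illustration, assuming NumPy is available; the helper `polygon_area` and the example matrix `A` are hypothetical and chosen just for this demonstration. It transforms the corners of the unit square and compares the new area with the determinant.

```python
import numpy as np

def polygon_area(points):
    """Signed area via the shoelace formula (positive for counter-clockwise corners)."""
    x, y = points[:, 0], points[:, 1]
    return 0.5 * np.sum(x * np.roll(y, -1) - np.roll(x, -1) * y)

# Corners of the unit square, listed counter-clockwise.
unit_square = np.array([[0, 0], [1, 0], [1, 1], [0, 1]], dtype=float)

# Hypothetical example matrix: it stretches and shears the plane.
A = np.array([[2.0, 1.0],
              [0.0, 1.5]])

# Each row is a point, so multiplying by A transposed applies A to every corner.
transformed = unit_square @ A.T

area_before = polygon_area(unit_square)
area_after = polygon_area(transformed)
det_A = np.linalg.det(A)

print("area before:", area_before)
print("area after :", area_after)
print("determinant:", det_A)
```

The area after the transformation and the determinant should agree up to floating-point error.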

Singular Matrix

Consider a matrix whose determinant is 0. The area of the square becomes 0, which means the square is squashed completely flat into a single line or even a point.

A matrix that collapses the space like this is called a Singular Matrix.

Such a matrix destroys information. Once the square is squashed into a line, all information about what the original square looked like is lost. This process is irreversible.

Thus, singular matrix (matrix with det = 0) is non-invertible.
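A small experiment makes the loss of information concrete. This is a minimal sketch, assuming NumPy; the matrix `S` and the vectors `u` and `w` are hypothetical examples. Two different inputs land on the same output, so there is no way to tell them apart afterwards.

```python
import numpy as np

# Hypothetical singular matrix: the second column is twice the first,
# so every input lands on the line spanned by (1, 2).
S = np.array([[1.0, 2.0],
              [2.0, 4.0]])

det_S = np.linalg.det(S)

# Two different inputs collapse to the same output.
u = np.array([2.0, 0.0])
w = np.array([0.0, 1.0])
same_output = np.allclose(S @ u, S @ w)

# Asking NumPy for the inverse of this exactly singular matrix raises an error.
try:
    np.linalg.inv(S)
    invertible = True
except np.linalg.LinAlgError:
    invertible = False

print("det:", det_S)
print("different inputs, same output:", same_output)
print("invertible:", invertible)
```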

For a $2 \times 2$ matrix :

$$A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}$$

The determinant is :

$$\det(A) = ad - bc$$

The determinant is the factor by which the linear transformation scales area.

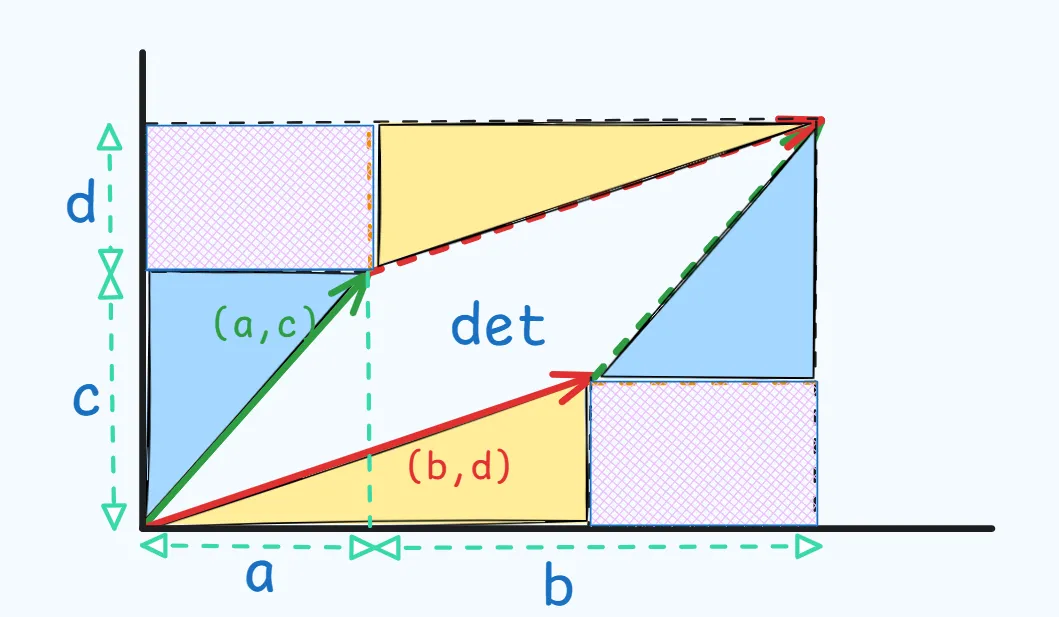

Derivation of Determinant

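The matrix $A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}$ transforms the basis vectors into 2 new vectors (its columns) :

- Vector 1 : $\begin{bmatrix} a \\ c \end{bmatrix}$. Moves $a$ steps right, $c$ steps up.

- Vector 2 : $\begin{bmatrix} b \\ d \end{bmatrix}$. Moves $b$ steps right, $d$ steps up.

These 2 vectors form a parallelogram. The determinant is the area of this parallelogram.

To find the area of the parallelogram, take the area of the big box and subtract the empty spaces surrounding the parallelogram.

- As can be observed from the figure, area of big box = $(a + b)(c + d)$.

- The waste area consists of 2 each of the blue and yellow triangles and the 2 pink rectangles.

- Their combined area = $2 \cdot \tfrac{1}{2}ac + 2 \cdot \tfrac{1}{2}bd + 2bc = ac + bd + 2bc$.

Thus,

$$\det(A) = (a + b)(c + d) - (ac + bd + 2bc) = ad - bc$$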
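The box-minus-waste construction can be sanity-checked with plain arithmetic. The sketch below is illustrative only: the function name is hypothetical, and it assumes the matrix entries are called `a`, `b` (top row) and `c`, `d` (bottom row), all positive, as in the picture.

```python
def parallelogram_area_by_box(a, b, c, d):
    """Area via the 'big box minus waste' construction.

    Assumes all four entries are positive, matching the figure.
    """
    big_box = (a + b) * (c + d)
    triangles = a * c + b * d   # two triangles of each kind: 2 * (1/2) * base * height
    rectangles = 2 * b * c      # two identical rectangles
    return big_box - triangles - rectangles

a, b, c, d = 3, 1, 2, 4
box_area = parallelogram_area_by_box(a, b, c, d)
formula_area = a * d - b * c

print(box_area, formula_area)
```

Both numbers come out the same because the expression simplifies to `a * d - b * c` for any inputs.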

Why determinant is the scaling factor?

The term $ad$ represents scaling along the main axes based on the original basis vectors.

The term $bc$ represents the twist or shear that interferes with the area.

So subtracting the twist from the stretch gives the true scaling factor.

Negative Determinant

It signifies that the universe has been flipped over. The transformed basis vectors are now arranged in a clockwise manner.

- Going counter-clockwise from the first basis vector to the second is the standard orientation, and hence its determinant is positive.

- Going clockwise from the first basis vector to the second is the swapped orientation, and hence its determinant is negative.

Thus, a negative determinant just shows that the orientation has flipped. Its absolute value is still the factor by which area is scaled.
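Swapping the two basis vectors is the simplest way to see the sign flip. A minimal sketch, assuming NumPy; the variable names are illustrative only.

```python
import numpy as np

# Columns are the images of the two basis vectors, in the standard order.
standard = np.array([[1.0, 0.0],
                     [0.0, 1.0]])

# Reversing the column order swaps the two basis vectors,
# which turns the counter-clockwise arrangement into a clockwise one.
swapped = standard[:, ::-1]

det_standard = np.linalg.det(standard)
det_swapped = np.linalg.det(swapped)

print("standard:", det_standard)
print("swapped :", det_swapped)
```

The area factor (the absolute value) is unchanged; only the sign differs.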

Determinant Drag

Drag the red and green basis vectors to change the matrix value. These vectors represent the columns of the transformation matrix.

- Blue Square : Positive determinant (normal orientation)

- Purple Square : Negative determinant (flipped orientation)

- Collapsed Line : When determinant ≈ 0, the square flattens completely

There are also pre-existing presets for the transformation matrix.

Eigen Vectors (Stubborn Vectors)

Imagine a sheet of paper or a grid with some vectors (arrows) drawn on it, and the paper is stretched horizontally from the left and right sides.

- A vector pointing at $45\degree$ will tilt as it gets pulled horizontally.

- A vector pointing perfectly horizontally (e.g. $\begin{bmatrix} 1 \\ 0 \end{bmatrix}$) will not tilt. It will just get longer.

- A vector pointing perfectly vertically (e.g. $\begin{bmatrix} 0 \\ 1 \end{bmatrix}$) will also not tilt.

Eigenvectors are these stubborn arrows. They stay on the same line even when other vectors get knocked off theirs when a matrix transformation is applied. They don't change direction; they only get scaled.

- Eigenvector ($v$) : The vector that refuses to rotate.

- Eigenvalue ($\lambda$) : The number describing how much the vector is stretched.

Eigenvectors are like the skeleton of the matrix. They reveal the principal axes along which the transformation acts.

$$Av = \lambda v$$

- $Av$ : Take the vector $v$ and transform it with matrix $A$.

- $\lambda v$ : Take the original vector $v$ and scale it by $\lambda$.

- The transformation didn't change the direction. It acted exactly like scalar multiplication.
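The stretched-paper picture translates directly into code. The sketch below assumes NumPy; the matrix `A` is a hypothetical horizontal stretch by a factor of 3, and the helper names are made up for illustration.

```python
import numpy as np

def direction(v):
    """Unit vector pointing the same way as v."""
    return v / np.linalg.norm(v)

def keeps_direction(A, v):
    """True if A @ v points the same way as v.

    This simple check treats a flipped vector as a change of direction,
    so it only suits positive eigenvalues.
    """
    return bool(np.allclose(direction(A @ v), direction(v)))

# Hypothetical horizontal stretch by a factor of 3 (the "pulled paper").
A = np.array([[3.0, 0.0],
              [0.0, 1.0]])

horizontal = np.array([1.0, 0.0])
vertical = np.array([0.0, 1.0])
diagonal = np.array([1.0, 1.0])   # points at 45 degrees

for name, v in [("horizontal", horizontal), ("vertical", vertical), ("diagonal", diagonal)]:
    print(name, "->", A @ v, "keeps direction:", keeps_direction(A, v))
```

The horizontal and vertical arrows are only rescaled, while the diagonal one tilts toward the horizontal.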

Characteristic Equation

To find the eigenvectors, rearrange the equation $Av = \lambda v$ :

$$Av - \lambda v = 0$$

Factoring out $v$ :

$$(A - \lambda I)v = 0$$

If the matrix ($A - \lambda I$) is invertible, then the only solution will be $v = 0$. For a non-zero vector $v$ to solve this equation, the matrix ($A - \lambda I$) has to squash the vector space (send a non-zero vector to zero). This means the determinant of the matrix must be 0 :

$$\det(A - \lambda I) = 0$$
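For a $2 \times 2$ matrix, setting that determinant to zero gives a quadratic in the eigenvalue. The sketch below assumes NumPy and uses a hypothetical matrix `A` that is not taken from this post. It relies on the standard fact that the quadratic is $\lambda^2 - \text{tr}(A)\lambda + \det(A) = 0$, solves it, and cross-checks against NumPy's own eigenvalue routine.

```python
import numpy as np

# Hypothetical example matrix, chosen only for illustration.
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# Coefficients of lambda^2 - trace(A) * lambda + det(A), highest power first.
coefficients = [1.0, -np.trace(A), np.linalg.det(A)]
roots = np.sort(np.roots(coefficients))

# Cross-check with NumPy's eigenvalue routine.
eigenvalues = np.sort(np.linalg.eigvals(A))

# At each eigenvalue, A - lambda * I should be singular (determinant close to 0).
shifted_dets = [np.linalg.det(A - lam * np.eye(2)) for lam in eigenvalues]

print("roots of characteristic equation:", roots)
print("np.linalg.eigvals               :", eigenvalues)
print("det(A - lambda I)               :", shifted_dets)
```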

Solved Example of finding eigen vectors and eigen values

Solving the Characteristic equation using the above matrix : .

So the 2 eigenvalues are : and .

Solving the linear system $(A - \lambda I)v = 0$ for the vector $v$ will give the eigenvectors.

: It will give . So any vector where equals is an eigenvector.

- Eigenvector 1 :

: It will give .

- Eigenvector 2 :
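The same procedure can be reproduced numerically. The sketch below assumes NumPy and, importantly, uses a hypothetical matrix `A` that is not necessarily the one solved above. It asks `np.linalg.eig` for the eigenpairs and confirms that each one satisfies the linear system with $A - \lambda I$.

```python
import numpy as np

# Hypothetical matrix for illustration; not necessarily the one from the worked example.
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# np.linalg.eig returns the eigenvalues and a matrix whose COLUMNS are eigenvectors.
eigenvalues, eigenvectors = np.linalg.eig(A)

# Each pair should satisfy (A - lambda * I) v = 0.
residuals = [(A - lam * np.eye(2)) @ v
             for lam, v in zip(eigenvalues, eigenvectors.T)]

for lam, v, r in zip(eigenvalues, eigenvectors.T, residuals):
    print("lambda =", lam, "v =", v, "residual =", r)
```

NumPy normalizes each eigenvector to unit length, so any non-zero multiple of a printed vector is an equally valid eigenvector.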

Relationship between trace & eigenvalues and determinant & eigenvalues

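- Trace : sum of the top-left to bottom-right diagonal. It equals the sum of the eigenvalues : $\text{tr}(A) = \lambda_1 + \lambda_2$.

- Determinant : equals the product of the eigenvalues : $\det(A) = \lambda_1 \lambda_2$.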
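Both relationships (trace equals the sum of the eigenvalues, determinant equals their product) are easy to confirm on an example. A minimal sketch, assuming NumPy and a hypothetical matrix `A`:

```python
import numpy as np

# Hypothetical example matrix.
A = np.array([[4.0, 1.0],
              [2.0, 3.0]])

eigenvalues = np.linalg.eigvals(A)

trace_matches = bool(np.isclose(np.trace(A), eigenvalues.sum()))
det_matches = bool(np.isclose(np.linalg.det(A), eigenvalues.prod()))

print("eigenvalues:", eigenvalues)
print("trace == sum of eigenvalues  :", trace_matches)
print("det == product of eigenvalues:", det_matches)
```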

EigenVector Hunt

- Red Arrow : input vector

- Yellow Arrow : transformed vector

- Green Arrow : When the input and transformed vectors align, that is the eigenvector.

There are 3 presets for the transformation matrix.

Drag the input vector to find the eigenvector.

Rotation Matrix

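$$R = \begin{bmatrix} 0 & 1 \\ -1 & 0 \end{bmatrix}$$

This matrix rotates the grid $90\degree$ clockwise. Its characteristic equation is $\det(R - \lambda I) = \lambda^2 + 1 = 0$, which gives $\lambda = \pm i$.

Hence, there are no real eigenvalues and no real eigenvectors: every vector gets knocked off its line.

Thus, it is not possible to find an eigenvector in the Pure Rotation preset above.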
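NumPy reports the same thing. The sketch below assumes NumPy and writes the $90\degree$ clockwise rotation as the matrix `R` (an assumption about the exact matrix meant here); its eigenvalues come back as a purely imaginary pair.

```python
import numpy as np

# 90-degree clockwise rotation: (1, 0) goes to (0, -1) and (0, 1) goes to (1, 0).
R = np.array([[0.0, 1.0],
              [-1.0, 0.0]])

eigenvalues = np.linalg.eigvals(R)

# An eigenvalue is real only if its imaginary part is (numerically) zero.
has_real_eigenvalue = bool(np.any(np.isclose(eigenvalues.imag, 0.0)))

print("eigenvalues:", eigenvalues)
print("any real eigenvalue:", has_real_eigenvalue)
```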

Eigen Decomposition (Matrix Factorization)

Matrix $A$'s eigen decomposition is :

$$A = PDP^{-1}$$

- $P$ : Eigenvector Matrix. Take all the eigenvectors ($v_1, v_2, \dots$) and put them together as the columns of a single matrix.

- $D$ : Eigenvalue Matrix. This is a Diagonal Matrix: all the off-diagonal values are 0, and the corresponding eigenvalues ($\lambda_1, \lambda_2, \dots$) sit on the main diagonal.

- $P^{-1}$ : Inverse of the eigenvector matrix.
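The three pieces can be assembled and multiplied back together to recover the original matrix. This is a minimal sketch, assuming NumPy; the matrix `A` is hypothetical, and the names `P` (eigenvector matrix) and `D` (diagonal eigenvalue matrix) are labels chosen for this example.

```python
import numpy as np

# Hypothetical example matrix.
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# Columns of P are the eigenvectors; D carries the matching eigenvalues on its diagonal.
eigenvalues, P = np.linalg.eig(A)
D = np.diag(eigenvalues)

# Eigenvector matrix, times eigenvalue matrix, times inverse eigenvector matrix.
A_rebuilt = P @ D @ np.linalg.inv(P)

print("D =\n", D)
print("rebuilt A =\n", A_rebuilt)
```

The rebuilt matrix should match `A` up to floating-point error. This only works when `P` is invertible, i.e. when the matrix has a full set of independent eigenvectors.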

Intuition of Eigen Decomposition

A matrix applied to a vector may mix the x-coordinate into the y-coordinate and vice versa. Everything is coupled together. Eigenvectors are the natural axes of the transformation. If we look at the world from the perspective of the eigenvectors, there is no mixing. There is only stretching.

Eigen Decomposition breaks the transformation (matrix) into 3 distinct moves :

$P^{-1}$ (The Twist) :

- Change the viewpoint. Rotate the entire coordinate system so that the eigenvectors become the new x and y axes.

$D$ (The Stretch) :

- Now that we are aligned with the eigenvectors, the transformation is just scaling along the axes. There is no more rotation or shearing.

$P$ (The Untwist) :

- Rotate the coordinate system back to the original standard orientation.

A dense matrix is like a diagonal matrix (which is computationally easier to use) that is "wearing a costume." The matrices $P^{-1}$ and $P$ are just the process of taking the costume off and putting it back on.

Thus, it is also called Diagonalization, because the matrix $A$ is replaced with a diagonal matrix $D$.

Why perform diagonalization ?

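As established, $A = PDP^{-1}$.

Similarly,

$$A^2 = (PDP^{-1})(PDP^{-1}) = PD^2P^{-1}$$

and in general $A^n = PD^nP^{-1}$, because every inner $P^{-1}P$ cancels to the identity.

- $D^n$ is a lot easier to calculate as $D$ is a diagonal matrix. So, just raise each diagonal element to the power $n$.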
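The shortcut for matrix powers can be compared against repeated multiplication. A minimal sketch, assuming NumPy; the matrix `A`, the exponent `n`, and the names `P` (eigenvector matrix) and `D_to_the_n` are hypothetical choices for this example.

```python
import numpy as np

# Hypothetical example matrix and exponent.
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])
n = 5

eigenvalues, P = np.linalg.eig(A)

# Raising a diagonal matrix to a power only needs element-wise powers of its diagonal.
D_to_the_n = np.diag(eigenvalues ** n)
via_diagonalization = P @ D_to_the_n @ np.linalg.inv(P)

# Reference result using repeated matrix multiplication.
direct = np.linalg.matrix_power(A, n)

print("via diagonalization =\n", via_diagonalization)
print("direct power        =\n", direct)
```

For one small matrix the saving is negligible; the benefit shows up when the same matrix is raised to many different or very large powers.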

Drawback

Standard Eigenvectors and Eigenvalues are strictly for square matrices.

$Av = \lambda v$ : The output vector must be parallel to the input vector.

- For a Square matrix ($2\times2$) :

- Takes a 2D vector and outputs a 2D vector.

- For a Rectangular matrix ($3\times2$) :

- Takes a 2D vector and outputs a 3D vector.

- It changes the dimension, but the result should be the same as a scalar multiplication, which means the dimension must not change.

In most real-world problems, the data is not square (e.g. 1000 rows (users) & 50 columns (features)).

For this reason, SVD, which works for a matrix of any shape, is used.
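The restriction shows up immediately in code. The sketch below assumes NumPy; the random matrix `X` is stand-in data with the 1000-by-50 shape mentioned above. The eigenvalue routine rejects the rectangular matrix, while `np.linalg.svd` accepts it.

```python
import numpy as np

# Stand-in data: 1000 rows (users) and 50 columns (features).
rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 50))

# Eigenvalues are only defined for square matrices, so NumPy raises an error here.
try:
    np.linalg.eigvals(X)
    eig_worked = True
except np.linalg.LinAlgError:
    eig_worked = False

# SVD works for any shape.
U, singular_values, Vt = np.linalg.svd(X, full_matrices=False)

print("eigenvalues computed:", eig_worked)
print("SVD shapes:", U.shape, singular_values.shape, Vt.shape)
```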

Coordinate Changer

This simulation visualizes the equation :

$$A = PDP^{-1}$$

- $A$ : transformation matrix in the standard (x, y) coordinates. It is often coupled, i.e., x affects y and y affects x, causing shearing and rotation.

- $D$ : diagonal matrix containing the eigenvalues ($\lambda_1, \lambda_2$). It represents pure stretching/shrinking with no rotation.

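The eigenvectors are defined as :

$$v_1 = \begin{bmatrix} \cos\theta \\ \sin\theta \end{bmatrix}, \quad v_2 = \begin{bmatrix} -\sin\theta \\ \cos\theta \end{bmatrix}$$

These 2 vectors are dynamically calculated from the Eigenvector Angle slider ($\theta$).

- These 2 vectors are always perpendicular, thus forming a pure rotation matrix $P$.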

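The matrix $A$ is not fixed in this simulation. For any point $v$, the transformation is calculated using $Av = PDP^{-1}v$.

- $P$ (Basis Change) : The matrix formed by the eigenvectors as columns: $P = \begin{bmatrix} v_1 & v_2 \end{bmatrix}$

- $D$ (Diagonal Scaling) : The matrix containing the eigenvalues: $D = \begin{bmatrix} \lambda_1 & 0 \\ 0 & \lambda_2 \end{bmatrix}$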

Thus, to calculate $Av$, the sim computes (on the left side) :

- $P^{-1}$ : Takes the cross formed by the eigenvectors and rotates it to match the x, y axes.

- $D$ : Stretches x by $\lambda_1$ and y by $\lambda_2$.

- $P$ : Rotates the world back to the original angle.

On Right Side :

- Let the Horizontal Axis be Eigenvector 1 ($v_1$).

- Let the Vertical Axis be Eigenvector 2 ($v_2$).

So, because of the way the camera view is defined, the grid lines are the eigenvectors.

All the points on the smiley face undergo the respective transformation :

- Left : Calculates $Av$ by doing the three-step dance ($PDP^{-1}v$).

- Right : The transformation uses only the diagonal matrix $D$. Because the camera is already "rotated" to align with the eigenvectors, we skip the rotation steps ($P^{-1}$ and $P$) entirely. So the transformation becomes just the scaling : `v.x * lambda1` and `v.y * lambda2`.
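The two views can be mimicked in a few lines. This is only a sketch of the idea, not the simulation's actual code: it assumes NumPy, assumes the eigenvectors are built from a single angle as a perpendicular unit pair, and uses made-up values for the angle, the eigenvalues and the test point.

```python
import numpy as np

theta = np.deg2rad(30.0)       # hypothetical slider value
lambda1, lambda2 = 2.0, 0.5    # hypothetical eigenvalues

# Assumed construction: two perpendicular unit vectors built from the angle.
v1 = np.array([np.cos(theta), np.sin(theta)])
v2 = np.array([-np.sin(theta), np.cos(theta)])
P = np.column_stack([v1, v2])  # eigenvectors as columns
D = np.diag([lambda1, lambda2])

point = np.array([1.0, 2.0])   # hypothetical point in standard (x, y) coordinates

# Left view: the three steps, carried out in standard coordinates.
in_eigen_coords = np.linalg.inv(P) @ point   # twist
stretched = D @ in_eigen_coords              # stretch
left_result = P @ stretched                  # untwist

# Right view: the axes already are the eigenvectors, so only the scaling remains.
right_result = np.array([in_eigen_coords[0] * lambda1,
                         in_eigen_coords[1] * lambda2])

print("left view result (standard coords):", left_result)
print("right view result (eigen coords)  :", right_result)
print("same point in both views:", np.allclose(P @ right_result, left_result))
```

Because `P` is a pure rotation in this setup, its inverse equals its transpose, so `P.T` could replace `np.linalg.inv(P)`.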

With this, the post on determinants and eigenvectors, their geometric implications and how diagonalization works and simplifies the task is complete.