5. Maths4ML: Matrices

Matrices are Space Warpers

A matrix is not just a spreadsheet or a container for data. It is a function, a machine. In an equation like $y = Ax$ (in a neural network, linear regression, etc.), the matrix $A$ is an agent that grabs the data vector ($x$), physically moves it, warps it and transforms it into a new position ($y$).

Matrices can stretch space and collapse dimensions.

Matrix transforming the Basis Vectors

Let the basis vectors be denoted by $\hat{i}$ and $\hat{j}$:

- $\hat{i} = \begin{bmatrix} 1 \\ 0 \end{bmatrix}$: a green arrow pointing right.

- $\hat{j} = \begin{bmatrix} 0 \\ 1 \end{bmatrix}$: a red arrow pointing up.

The columns of a matrix tell exactly where these 2 arrows will land after the transformation.

Column 1: the basis vector $\hat{i}$ now lives here, having moved from $\begin{bmatrix} 1 \\ 0 \end{bmatrix}$.

Column 2: the basis vector $\hat{j}$ now lives here, having moved from $\begin{bmatrix} 0 \\ 1 \end{bmatrix}$.

Every other point on the grid will follow the new grid lines (basis) formed by these 2 arrows.

So, for example, a point $(2, 1)$ in the old system would have meant going 2 units along $\hat{i}$ and 1 unit along $\hat{j}$. Since these basis vectors now point in different directions, the same instructions lead to a completely different position.

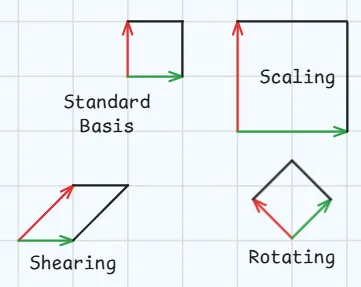
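
As a minimal sketch of this idea in plain Python, the snippet below multiplies a matrix by each basis vector and by the point $(2, 1)$. The matrix `A` and the helper `matvec` are hypothetical, chosen only for illustration.

```python
def matvec(A, v):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

# Hypothetical transformation, chosen only for illustration.
A = [[1, -1],
     [2,  1]]

i_hat = [1, 0]  # green arrow pointing right
j_hat = [0, 1]  # red arrow pointing up

print(matvec(A, i_hat))   # [1, 2]  -> exactly column 1 of A
print(matvec(A, j_hat))   # [-1, 1] -> exactly column 2 of A

# The point (2, 1) still means "2 of i_hat plus 1 of j_hat",
# but now measured along the transformed arrows.
print(matvec(A, [2, 1]))  # [1, 5] = 2 * [1, 2] + 1 * [-1, 1]
```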

Fundamental Matrices

1. Scaling

Doubling the length of the green and red arrows makes the matrix zoom in on the data. For example:

$$\begin{bmatrix} 2 & 0 \\ 0 & 2 \end{bmatrix}$$

2. Rotation

The arrows keep their length but pivot by $90\degree$, so the matrix spins the entire world. The grid remains square but tilted. For a counter-clockwise turn:

$$\begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}$$

3. Shearing

If the bottom of a square is fixed and the top is pushed sideways, the square turns into a parallelogram.
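
The three transformations above can be tried on the corners of a unit square. This is only a sketch: the shear factor of 1 and the counter-clockwise rotation direction are assumptions, and `matvec` and `transform` are illustrative helper names.

```python
def matvec(A, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def transform(A, points):
    """Apply the matrix A to every point in a list."""
    return [matvec(A, p) for p in points]

scale  = [[2, 0], [0, 2]]    # both arrows double in length
rotate = [[0, -1], [1, 0]]   # 90 degrees counter-clockwise (assumed direction)
shear  = [[1, 1], [0, 1]]    # top pushed right, bottom fixed (assumed factor 1)

# Corners of the unit square, listed counter-clockwise from the origin.
square = [[0, 0], [1, 0], [1, 1], [0, 1]]

print(transform(scale, square))   # [[0, 0], [2, 0], [2, 2], [0, 2]]
print(transform(rotate, square))  # [[0, 0], [0, 1], [-1, 1], [-1, 0]]
print(transform(shear, square))   # [[0, 0], [1, 0], [2, 1], [1, 1]]
```

In the sheared output the bottom edge is unchanged while the top edge has slid one unit to the right, which is the parallelogram described above.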

The image below shows the matrix transformations covered above.

Matrix Vector Multiplication

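$$\begin{bmatrix} a & b \\ c & d \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} ax + by \\ cx + dy \end{bmatrix}$$

This is row-by-column multiplication. It is computationally correct, but it doesn't offer any intuition. The same thing can be represented as:

$$\begin{bmatrix} a & b \\ c & d \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = x \begin{bmatrix} a \\ c \end{bmatrix} + y \begin{bmatrix} b \\ d \end{bmatrix}$$

This is literally saying:

Take $x$ steps along the transformed green arrow (Column 1) and then take $y$ steps along the transformed red arrow (Column 2).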
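
A quick way to convince yourself that both views agree is to compute them side by side. The matrix and vector below are hypothetical values picked for the sketch.

```python
# Hypothetical matrix and vector, chosen only for illustration.
A = [[3, 1],
     [1, 2]]
v = [2, 1]

# Row view: each output entry is a row of A dotted with v.
row_view = [sum(a * x for a, x in zip(row, v)) for row in A]

# Column view: v[0] copies of column 1 plus v[1] copies of column 2.
col1 = [row[0] for row in A]
col2 = [row[1] for row in A]
col_view = [v[0] * c1 + v[1] * c2 for c1, c2 in zip(col1, col2)]

print(row_view)  # [7, 4]
print(col_view)  # [7, 4]
```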

Matrix-Matrix Multiplication

A matrix-matrix multiplication like $C = AB$ using the standard row-by-column method is a mess of numbers.

A better way is to look at matrix $B$ as a collection of columns (vectors): $B = \begin{bmatrix} b_1 & b_2 \end{bmatrix}$.

So now, instead of one big operation, it is just matrix-vector multiplication done twice, once for each column of $B$.

- Column 1 of the result: $A$ acts on the first column of $B$.

- Column 2 of the result: $A$ acts on the second column of $B$.

- Column 1 of matrix $C$ is the result of passing column 1 of $B$ through machine (matrix) $A$: $c_1 = A b_1$

- Column 2 of matrix $C$ is the result of passing column 2 of $B$ through machine (matrix) $A$: $c_2 = A b_2$

So, finally, matrix $C$ is just these 2 results pasted side by side: $C = \begin{bmatrix} c_1 & c_2 \end{bmatrix}$

Thus,

$$AB = A \begin{bmatrix} b_1 & b_2 \end{bmatrix} = \begin{bmatrix} A b_1 & A b_2 \end{bmatrix}$$

The output columns of $C$ are literally just weighted sums of the columns of $A$. The resulting shape must live inside the space defined by $A$'s columns.
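
The column-by-column recipe can be sketched directly in code. The matrices and the helper names `matvec`, `column` and `matmul_by_columns` are assumptions made for this illustration.

```python
def matvec(A, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def column(M, k):
    """Extract column k of a matrix stored as a list of rows."""
    return [row[k] for row in M]

def matmul_by_columns(A, B):
    """Build A @ B one column at a time: result column k = A acting on B's column k."""
    cols = [matvec(A, column(B, k)) for k in range(len(B[0]))]
    # Paste the result columns side by side (turn columns back into rows).
    return [list(row) for row in zip(*cols)]

# Hypothetical matrices, chosen only for illustration.
A = [[1, 2],
     [3, 4]]
B = [[0, 1],
     [1, 1]]

C = matmul_by_columns(A, B)
print(matvec(A, column(B, 0)))  # [2, 4] -> column 1 of the result
print(matvec(A, column(B, 1)))  # [3, 7] -> column 2 of the result
print(C)                        # [[2, 3], [4, 7]]
```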

Matrix Multiplication is Function Composition

Matrix multiplication is simply chaining multiple machines.

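The vector $x$ is the raw material. In the product $ABx$, the matrix closest to the vector acts first:

- Machine $B$ is the first machine, which transforms it: $Bx$.

- Machine $A$ is the second machine, which grabs the result of Machine $B$ and transforms it again: $A(Bx) = (AB)x$.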
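
As a small sketch of the chaining idea, the snippet below feeds a vector through two machines one after the other and compares that with a single pass through their product. The specific matrices (a shear followed by a scaling) and the helper names are assumptions for illustration.

```python
def matvec(A, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def matmul(A, B):
    """Standard row-by-column matrix product."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

first  = [[1, 1], [0, 1]]   # first machine: shear (hypothetical choice)
second = [[2, 0], [0, 2]]   # second machine: scale by 2 (hypothetical choice)
x = [1, 1]                  # raw material

# Run the machines one after the other.
step_by_step = matvec(second, matvec(first, x))

# Fuse the two machines into one, then run it once.
combined = matmul(second, first)
in_one_go = matvec(combined, x)

print(step_by_step)  # [4, 2]
print(in_one_go)     # [4, 2]
```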

Why MatMul is not commutative

Suppose 2 matrices $A$ & $B$, which stretch the $x$-axis by 2 and rotate everything by $90\degree$ respectively.

Scenario 1: Stretch then rotate ($BA$)

- $A$: stretches left-right.

- $B$: rotates so that left becomes bottom & right becomes top.

Scenario 2: Rotate then stretch ($AB$)

- $B$: rotates so that left becomes bottom & right becomes top.

- $A$: stretches the original top-bottom (which are now left-right).

Thus, even though the same operations are applied, the order changes everything: $AB \neq BA$.
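
The two scenarios can be checked numerically. This sketch assumes a counter-clockwise rotation and uses illustrative variable names.

```python
def matmul(A, B):
    """Standard row-by-column matrix product."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

stretch = [[2, 0], [0, 1]]    # stretch the x-axis by 2
rotate  = [[0, -1], [1, 0]]   # rotate by 90 degrees counter-clockwise (assumed)

# The matrix written on the right is the one applied first.
stretch_then_rotate = matmul(rotate, stretch)
rotate_then_stretch = matmul(stretch, rotate)

print(stretch_then_rotate)  # [[0, -1], [2, 0]]
print(rotate_then_stretch)  # [[0, -2], [1, 0]]
print(stretch_then_rotate == rotate_then_stretch)  # False
```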

Invertible Matrices

Chaining matrices can bring us back to where we started.

- Matrix $A$ is a Shear Right matrix.

- Matrix $A^{-1}$ is a Shear Left matrix.

Therefore, $A^{-1}A$ will slant a square right and then push it back to the original shape.

Thus,

$$A^{-1}A = I$$

$I$ is the identity matrix that does nothing.
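
A minimal check of the shear example, assuming a shear factor of 1 in each direction:

```python
def matmul(A, B):
    """Standard row-by-column matrix product."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

shear_right = [[1, 1], [0, 1]]    # assumed shear factor of 1
shear_left  = [[1, -1], [0, 1]]   # undoes the shear above

# Shear right first, then shear left: the net effect is "do nothing".
round_trip = matmul(shear_left, shear_right)
print(round_trip)  # [[1, 0], [0, 1]] -> the identity matrix
```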

Transpose

The mechanical definition is to swap the rows & columns: $(A^T)_{ij} = A_{ji}$.

Co-variance

$X^TX$ is the similarity map of the data $X$. Let there be a dataset of 3 students consisting of their study time & score.

- Column 1 : Blue vector is the study vector.

- Column 2 : Red vector is the score vector.

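After transposing, in $X^T$, Row 1 is the study vector & Row 2 is the score vector.

Thus, writing the study column as $u$ and the score column as $v$, $X^TX$ will become:

$$X^TX = \begin{bmatrix} u \cdot u & u \cdot v \\ v \cdot u & v \cdot v \end{bmatrix}$$

When each column is mean-centered and the result is divided by $n - 1$, these dot products are exactly the variances and covariances: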

- Cell (1,1) : Variance of the Study vector.

- Cell (2,2) : Variance of the Score vector.

- Cell (1,2) & (2,1) : Covariance of the Score & Study vectors.

- Diagonals: How spread out is this feature? (Variance)

- Off-Diagonals: How much does Feature A look like Feature B? (Covariance/Similarity)
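
Here is a small sketch of that computation. The study hours and scores are made-up numbers for illustration only, and the code assumes the usual convention of mean-centering each column and dividing by $n - 1$.

```python
# Hypothetical data for 3 students (made-up numbers, for illustration only).
study = [1, 2, 3]      # hours studied
score = [50, 60, 70]   # exam score

def center(col):
    """Subtract the column mean so the column is centered at zero."""
    mean = sum(col) / len(col)
    return [x - mean for x in col]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

u, v = center(study), center(score)
n = len(study)

# X^T X for the centered data: every cell is a dot product of two columns.
gram = [[dot(u, u), dot(u, v)],
        [dot(v, u), dot(v, v)]]

# Dividing by n - 1 turns the dot products into sample (co)variances.
cov = [[g / (n - 1) for g in row] for row in gram]

print(gram)  # [[2.0, 20.0], [20.0, 200.0]]
print(cov)   # [[1.0, 10.0], [10.0, 100.0]]
```

The diagonal holds the variance of each feature, and the two off-diagonal cells are equal, which is why the result is symmetric.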

Symmetric Matrix

A symmetric matrix is equal to its own transpose: $A = A^T$.

Let :

This is an asymmetric matrix. If the input is a circle, this matrix will grab the top & slide it sideways. The result will be an oval, but a smeared one.

This is a symmetric matrix. It will also stretch a circle into an ellipse, but the stretching happens along two directions that are perpendicular to each other, with no twisting.

- Asymmetric Matrix : Might shear space, twist it, and squash it at weird angles.

- Symmetric Matrix : It creates a shape where the axes of stretching are perpendicular.
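
The difference can be sketched with two hypothetical matrices (these are not necessarily the ones used in the example above). For the symmetric one, the two directions that are purely stretched, without being turned, come out perpendicular.

```python
def matvec(A, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def transpose(M):
    return [list(col) for col in zip(*M)]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

# Hypothetical example matrices, chosen only for illustration.
symmetric  = [[2, 1], [1, 2]]
asymmetric = [[1, 1], [0, 1]]   # a shear

print(symmetric == transpose(symmetric))    # True
print(asymmetric == transpose(asymmetric))  # False

# Two directions that the symmetric matrix only stretches, never turns.
d1, d2 = [1, 1], [1, -1]
print(matvec(symmetric, d1))  # [3, 3]  -> d1 stretched by 3
print(matvec(symmetric, d2))  # [1, -1] -> d2 left as is (stretched by 1)
print(dot(d1, d2))            # 0 -> the two directions are perpendicular

# The shear tilts the vertical direction instead of just stretching it.
print(matvec(asymmetric, [0, 1]))  # [1, 1]
```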

Trace

The trace ($\mathrm{tr}(A)$) of a matrix is the sum of its diagonal elements.

In a matrix $A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}$:

- Off-diagonal elements:

  - $c$: tells how much $\hat{i}$ points up into the y-axis.

  - $b$: tells how much $\hat{j}$ points right into the x-axis.

They describe how much $x$ becomes $y$ and $y$ becomes $x$.

- Diagonal elements:

  - $a$: tells how much $\hat{i}$ stretches while staying along the x-axis.

  - $d$: tells how much $\hat{j}$ stretches while staying along the y-axis.

They describe the direct stretching.

So $\mathrm{tr}(A) = a + d$ tells how much the matrix is pushing outward along the original grid lines.

The Trace ignores the mixing. It only asks: "On average, is the machine stretching things out or shrinking them in?"

- Trace > 0 : Matrix is generally expanding the space.

- Trace < 0 : Matrix is generally collapsing the space.

- Trace = 0 : The expansion in one direction is perfectly cancelled by contraction in the other.
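
A short sketch makes the "ignores the mixing" point concrete: changing the off-diagonal entries leaves the trace untouched. The matrices are hypothetical examples.

```python
def trace(M):
    """Sum of the diagonal entries of a square matrix."""
    return sum(M[k][k] for k in range(len(M)))

# Hypothetical matrices, chosen only for illustration.
pure_stretch = [[2, 0], [0, 3]]    # no mixing at all
with_mixing  = [[2, 7], [-4, 3]]   # same diagonal, lots of mixing
balanced     = [[1, 5], [0, -1]]   # +1 along x cancels -1 along y

print(trace(pure_stretch))  # 5
print(trace(with_mixing))   # 5 -> the off-diagonal entries are ignored
print(trace(balanced))      # 0
```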

Range (Column Space)

Range of a matrix is the Span of its columns.

- Span of a set of vectors is the set of all the vectors that can be formed by scaling & adding those vectors.

Thus, the Column Space (Range) of a matrix is the set of vectors that can be obtained by taking all possible linear combinations of its column vectors.

Null Space

The null space (or kernel) of a matrix $A$ is the set of all vectors $x$ that satisfy the equation $Ax = 0$ (the zero vector).
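
As a minimal illustration with a hypothetical matrix whose second column is twice its first, the snippet below checks which vectors get crushed to zero.

```python
def matvec(A, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

# Hypothetical matrix: column 2 is exactly 2 * column 1.
A = [[1, 2],
     [2, 4]]

print(matvec(A, [2, -1]))  # [0, 0] -> in the null space
print(matvec(A, [-4, 2]))  # [0, 0] -> any multiple of [2, -1] is too
print(matvec(A, [1, 1]))   # [3, 6] -> not in the null space
```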

Rank

Rank is a single number that measures the dimension of the column space. It tells the number of actual, non-redundant (linearly independent) columns in a matrix. For example, take a matrix with 3 columns in which:

- Column 3 = Column 1 + Column 2.

- Thus, there are only 2 dimensions as the third column is just a diagonal lying in the plane defined by the first 2 columns.

- Thus, Rank = 2.

Dimensionality reduction builds on this fact: throw away the fake dimensions and keep only the Rank dimensions (the true signals).
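
One way to count the non-redundant columns is Gaussian elimination: reduce the matrix row by row and count the pivots that survive. The sketch below is a plain-Python version using exact fractions. The function name `rank` and the example matrix are assumptions for illustration, not the matrix from the example above.

```python
from fractions import Fraction

def rank(M):
    """Count the pivots left after Gaussian elimination (exact arithmetic)."""
    rows = [[Fraction(x) for x in row] for row in M]
    n_rows, n_cols = len(rows), len(rows[0])
    r = 0  # number of pivots found so far
    for c in range(n_cols):
        # Look for a row at or below r with a nonzero entry in column c.
        pivot = next((i for i in range(r, n_rows) if rows[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot here: this column adds no new dimension
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Clear column c in every row below the pivot row.
        for i in range(r + 1, n_rows):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == n_rows:
            break
    return r

# Hypothetical matrix in which column 3 = column 1 + column 2.
M = [[1, 0, 1],
     [0, 1, 1],
     [2, 3, 5]]

print(rank(M))                                  # 2
print(rank([[1, 0, 0], [0, 1, 0], [0, 0, 1]]))  # 3
```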

Thus,

| Concept | Definition | Intuition |
|---|---|---|
| Columns | The vectors that make up the matrix $A$. | The raw tools: arrows, some of which may be redundant. |
| Span | The set of all possible linear combinations of a list of vectors: $c_1 v_1 + c_2 v_2 + \dots + c_n v_n$. | The Cloud. The total shape created by stretching and combining the raw tools in every possible way. |
| Range (Column Space) | The subspace of outputs reachable by the linear transformation $x \mapsto Ax$. Mathematically equivalent to the Span of the columns. | The Reach. When we view the matrix as a machine, the Range is the specific "territory" the machine can touch. |
| Basis | A minimal set of linearly independent vectors that spans a subspace. | The Skeleton. If you strip away all the redundant columns (the fake tools), this is the clean, efficient set of arrows left over that still builds the same Cloud. |
| Rank | The dimension of the Column Space. | The Score. A single number representing the "True Dimension" of the output. It tells how many useful dimensions exist in your data. |

The Columns of the matrix generate a Span. When viewed as a function, this Span is called the Range. The smallest set of vectors needed to describe this Range is the Basis, and the count of vectors in that Basis is the Rank.

Space Warper

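Modify the transformation matrix by dragging the basis vectors ($\hat{i}$ and $\hat{j}$) or by changing the slider values representing $a$, $b$, $c$ and $d$:

- Vector $\hat{i}$ represents $\begin{bmatrix} a \\ c \end{bmatrix}$.

- Vector $\hat{j}$ represents $\begin{bmatrix} b \\ d \end{bmatrix}$.

When the green arrow aligns with the red arrow, it signifies the loss of a dimension.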

With this, the post on matrices, their geometric interpretation, the types of matrices and the different matrix operations is complete.