Dataset Representation

A tabular dataset consists of rows and columns :

Rows : Also called data points, samples, observations, instances, patterns.

- Each row represents a single observation.

Columns : Also called variables, characteristics, features, attributes.

- Each column represents a measurable property or attribute of an observation.

|    | H   | W  |
|----|-----|----|
| p1 | 130 | 55 |
| p2 | 140 | 65 |
| ⋮  | ⋮   | ⋮  |
| pn | 160 | 75 |

To perform statistical analysis, the dataset is viewed as a set of samples drawn from a probability distribution.

Each feature (column) is treated as a random variable (X).

- A random variable (RV) is a function that maps outcomes of a random phenomenon to numerical values.

- Thus, $X_{\text{height}}$ is an RV describing the distribution of the height feature in the population.

A single row containing d features is a random vector.

- If the dataset has features $X_1, X_2, \dots, X_d$, then a single observation is a vector $\mathbf{x} = [x_1, x_2, \dots, x_d]^T$.

Thus, the entire dataset is a collection of n observed random vectors :

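$$
D = \{\mathbf{x}_1, \mathbf{x}_2, \dots, \mathbf{x}_n\} \quad \text{(dataset)}
$$

$$
\mathbf{x}_i = [x_{i1}, x_{i2}, \dots, x_{id}]^T \quad \text{(random vector)}
$$

Thus, the full table can be visualized as an $n \times d$ matrix :

$$
X =
\begin{bmatrix}
\mathbf{x}_1^T \\
\mathbf{x}_2^T \\
\vdots \\
\mathbf{x}_n^T
\end{bmatrix}
=
\begin{bmatrix}
x_{11} & x_{12} & \cdots & x_{1d} \\
x_{21} & x_{22} & \cdots & x_{2d} \\
\vdots & \vdots & \ddots & \vdots \\
x_{n1} & x_{n2} & \cdots & x_{nd}
\end{bmatrix}
$$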
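As a small illustration of this layout, the sketch below stores the three visible rows of the table above as a list of rows in plain Python. The variable names (`X`, `n`, `d`, `x_2`, `heights`) are illustrative assumptions, and the elided rows of the table are simply left out.

```python
# Toy dataset: each row is one observation [H, W], taken from the visible
# rows of the table (the elided rows are omitted).
X = [
    [130.0, 55.0],  # p1
    [140.0, 65.0],  # p2
    [160.0, 75.0],  # pn
]

n = len(X)     # number of observations (rows)
d = len(X[0])  # number of features (columns)

x_2 = X[1]                       # one observation: a d-dimensional vector
heights = [row[0] for row in X]  # one feature: a column across all rows

print(n, d)     # 3 2
print(x_2)      # [140.0, 65.0]
print(heights)  # [130.0, 140.0, 160.0]
```

Reading along a row gives one observed random vector; reading down a column gives the observed values of one random variable.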

Measures of Central Tendency

Moment 1

1. Mean

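$$
\boldsymbol{\mu} = \frac{1}{n}\sum_{i=1}^{n} \mathbf{x}_i = \bar{\mathbf{x}}
$$

$$
X =
\begin{bmatrix}
x_{11} & x_{12} & x_{13} & \dots & x_{1d} \\
x_{21} & x_{22} & x_{23} & \dots & x_{2d} \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
x_{n1} & x_{n2} & x_{n3} & \dots & x_{nd}
\end{bmatrix}
$$

Where :

$$
\bar{x}_j = \frac{1}{n}\sum_{i=1}^{n} x_{ij} \quad \text{is the mean of the } j\text{-th column (feature).}
$$

And thus the resulting mean vector $\boldsymbol{\mu}$ is a collection of these individual feature means :

$$
\boldsymbol{\mu} = \bar{X} = [\bar{x}_1 \;\; \bar{x}_2 \;\; \bar{x}_3 \;\; \dots \;\; \bar{x}_d]^T
$$

2. Median

First, sort the data in ascending order.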

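If the number of values $n$ is odd :

$$
\text{Median} = x_{\frac{n+1}{2}}
$$

If the number of values $n$ is even :

$$
\text{Median} = \frac{1}{2}\left[x_{\frac{n}{2}} + x_{\frac{n}{2}+1}\right]
$$

Note : When outliers are present in the dataset, it is better to use the median, because it is not pulled towards extreme values the way the mean is.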
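The mean vector and the median rule can be sketched in a few lines of plain Python. The helper names `mean_vector` and `median` and the sample values are assumptions made for illustration; the second half shows how a single outlier moves the mean but not the median.

```python
def mean_vector(X):
    """Column-wise mean of a dataset given as a list of rows."""
    n = len(X)
    d = len(X[0])
    return [sum(row[j] for row in X) / n for j in range(d)]


def median(values):
    """Middle value of the sorted data (average of the two middle values if n is even)."""
    s = sorted(values)
    n = len(s)
    mid = n // 2
    if n % 2 == 1:
        return s[mid]                  # x_{(n+1)/2} in 1-based indexing
    return (s[mid - 1] + s[mid]) / 2   # average of x_{n/2} and x_{n/2 + 1}


X = [[130.0, 55.0], [140.0, 65.0], [160.0, 75.0]]
mu = mean_vector(X)  # approximately [143.33, 65.0]

values = [1, 2, 3, 4, 100]              # 100 is an outlier
mean_val = sum(values) / len(values)    # 22.0, dragged up by the outlier
median_val = median(values)             # 3, unaffected by the outlier
```

For an even number of values, `median([1, 2, 3, 4])` averages the two middle values and gives 2.5.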

Moment 2 (Measures of Dispersion)

3. Variance

Measures how far the data points are spread out from the mean.

$$
\sigma^2 = \frac{1}{n-1}\sum_{i=1}^{n}(x_i - \mu)^2
$$

- It weights outliers heavily because it squares the differences.

4. Standard Deviation

$$
\sigma = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}(x_i - \mu)^2}
$$

- Square root of the variance.

- It measures the typical distance of data points from the mean, in the same units as the data.
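A minimal sketch of both formulas, assuming a hypothetical `ddof` argument (named after the "delta degrees of freedom" convention used by numerical libraries) that chooses between the $n-1$ and $n$ denominators. The data values are made up for illustration.

```python
import math


def variance(values, ddof=1):
    """Sum of squared deviations from the mean, divided by (n - ddof)."""
    n = len(values)
    mu = sum(values) / n
    return sum((x - mu) ** 2 for x in values) / (n - ddof)


def std_dev(values, ddof=1):
    """Square root of the variance."""
    return math.sqrt(variance(values, ddof))


data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # mean is 5.0

sample_var = variance(data)              # 32 / 7, denominator n - 1
population_var = variance(data, ddof=0)  # 4.0, denominator n
population_std = std_dev(data, ddof=0)   # 2.0
```

The squared deviations sum to 32 here, so the only difference between the two variants is whether that sum is divided by 7 or by 8.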

5. Range

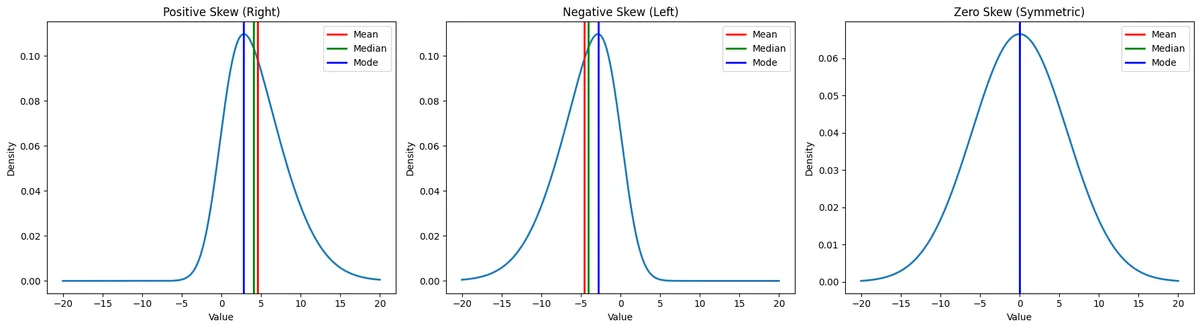

$$
\text{Range} = x_{\max} - x_{\min}
$$

Moment 3 / Skewness

Measures the asymmetry / symmetry of the distribution around the mean.

$$
\text{Skewness} = \frac{1}{n}\sum_{i=1}^{n}\left(\frac{x_i - \mu}{\sigma}\right)^3
$$

- Positive Skew : Tail extends to the right (right skewed).

- Negative Skew : Tail extends to the left (left skewed).

- Zero Skew : The two tails balance each other. Any perfectly symmetric distribution (such as the Normal distribution) has zero skew.

Moment 4 / Kurtosis

It defines the shape in terms of peak (sharpness) and tail (heaviness).

$$
\text{Kurtosis} = \frac{1}{n}\sum_{i=1}^{n}\left(\frac{x_i - \mu}{\sigma}\right)^4
$$

Note : In the denominator, Bessel's correction (using $n-1$ instead of $n$) is applied when the data is a sample of the population. When the whole population is available, use $n$.
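Skewness and kurtosis are the third and fourth standardized moments, so one helper can compute both. The sketch below is one possible reading of the formulas: it assumes the population form throughout ($n$ in every denominator, including the one inside $\sigma$), and the function name and sample lists are illustrative.

```python
import math


def standardized_moment(values, k):
    """(1/n) * sum(((x - mu) / sigma) ** k), using the population sigma (denominator n)."""
    n = len(values)
    mu = sum(values) / n
    sigma = math.sqrt(sum((x - mu) ** 2 for x in values) / n)
    return sum(((x - mu) / sigma) ** k for x in values) / n


symmetric = [1.0, 2.0, 3.0, 4.0, 5.0]
right_skewed = [1.0, 1.0, 2.0, 2.0, 10.0]  # one large value stretches the right tail

skew_sym = standardized_moment(symmetric, 3)       # approximately 0
skew_right = standardized_moment(right_skewed, 3)  # positive
kurt_sym = standardized_moment(symmetric, 4)       # approximately 1.7
```

Mirroring `right_skewed` around its mean would flip the sign of the skewness while leaving the kurtosis unchanged, since odd powers keep the sign of each deviation and even powers do not.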

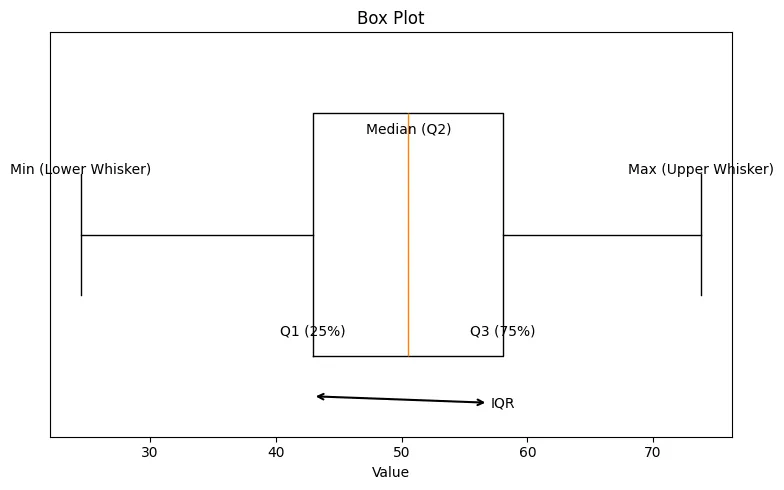

Box Plot

It is a standard way of displaying the distribution of data based on the five-number summary.

- Minimum (Q0) : The lowest data point excluding any outliers.

- First Quartile (Q1 / 25th Percentile) : The value below which 25% of the data falls. The bottom of the box.

- Median (Q2 / 50th Percentile) : The middle value of the dataset. The line inside the box.

- Third Quartile (Q3 / 75th Percentile) : The value below which 75% of the data falls. The top of the box.

- Maximum (Q4) : The highest data point excluding any outliers.

Interquartile Range (IQR) : The height of the box (Q3−Q1). It represents the middle 50% of the data.

Whiskers : Lines extending from the box indicating variability outside the upper and lower quartiles.

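- They are bounded by the fences $Q_1 - 1.5 \times \text{IQR}$ and $Q_3 + 1.5 \times \text{IQR}$. In the common convention, each whisker ends at the most extreme data point that still lies within its fence.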

Outliers : Individual points plotted beyond the whiskers.
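The quantities a box plot draws can be computed directly. This sketch uses `statistics.quantiles` from the Python standard library with `method="inclusive"`; other quartile conventions exist and give slightly different values, so treat the exact numbers as one convention among several. The data list is made up.

```python
import statistics

data = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 30.0]  # 30 is an outlier

# Quartiles Q1, Q2 (median), Q3 using linear interpolation between data points.
q1, q2, q3 = statistics.quantiles(data, n=4, method="inclusive")
iqr = q3 - q1

# Fences that bound the whiskers.
lower_fence = q1 - 1.5 * iqr
upper_fence = q3 + 1.5 * iqr

# Points beyond the fences are plotted individually as outliers.
outliers = [x for x in data if x < lower_fence or x > upper_fence]
inside = [x for x in data if lower_fence <= x <= upper_fence]

# Whiskers end at the most extreme points that are not outliers.
whisker_low, whisker_high = min(inside), max(inside)

print(q1, q2, q3)    # 3.25 5.5 7.75
print(iqr)           # 4.5
print(outliers)      # [30.0]
```

Here the upper fence is 14.5, so the upper whisker stops at 9.0 and the value 30.0 is drawn as a separate point.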

Covariance and Correlation

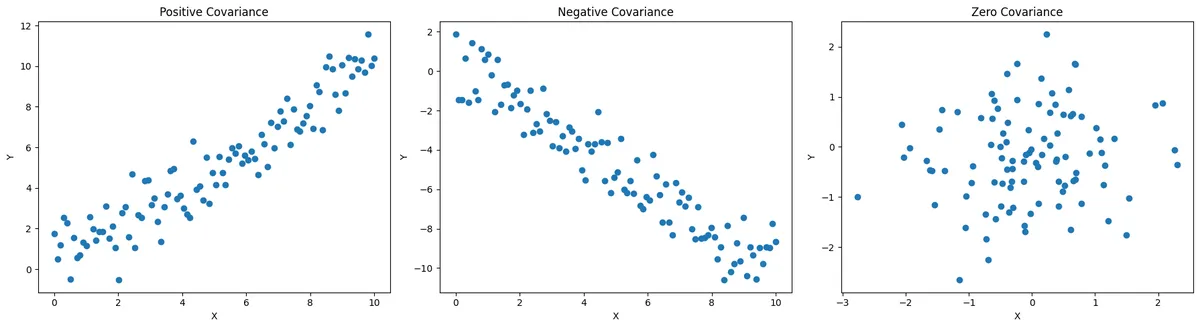

When analysing 2 features or random variables (X and Y), it is useful to look at how they vary together (their joint variability).

Covariance

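$$
\mathrm{Cov}(X, Y) = \frac{1}{n-1}\sum_{i=1}^{n}(x_i - \bar{X})(y_i - \bar{Y})
$$

This is the sample estimate of the population covariance :

$$
\mathrm{Cov}(X, Y) = E[(X - \mu_X)(Y - \mu_Y)]
$$

It measures the direction of the linear relationship between the variables.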

- Positive Covariance : As X increases, Y tends to increase.

- Negative Covariance : As X increases, Y tends to decrease.

- Zero Covariance : No linear relationship between the 2 RVs.

Correlation

It is the normalized version of covariance. It measures both the strength and direction of linear relationship.

$$
\rho_{X,Y} = \frac{\mathrm{Cov}(X, Y)}{\sigma_X \sigma_Y}
$$

- It is the Pearson Correlation Coefficient.

- It always lies between -1 and 1 (inclusive).

Note : The covariance of an RV $X$ with itself is $E[(X - E[X])(X - E[X])] = E[(X - E[X])^2] = \sigma_X^2$.

Thus, $\mathrm{Cov}(X, X) = \mathrm{Var}(X)$.
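The sample covariance and the Pearson coefficient can be sketched as follows. The function names are illustrative, and the H and W values are the three visible rows of the table at the top of these notes (the units mentioned in the comments are an assumption).

```python
import math


def covariance(xs, ys):
    """Sample covariance with the n - 1 denominator."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)


def correlation(xs, ys):
    """Pearson coefficient: covariance divided by the product of standard deviations."""
    sx = math.sqrt(covariance(xs, xs))  # Cov(X, X) is Var(X)
    sy = math.sqrt(covariance(ys, ys))
    return covariance(xs, ys) / (sx * sy)


heights = [130.0, 140.0, 160.0]
weights = [55.0, 65.0, 75.0]

cov_hw = covariance(heights, weights)    # approximately 150: positive, they rise together
corr_hw = correlation(heights, weights)  # approximately 0.98

# Rescaling a feature (for example cm to m) changes the covariance
# but leaves the correlation unchanged.
heights_m = [h / 100 for h in heights]
cov_scaled = covariance(heights_m, weights)    # approximately 1.5
corr_scaled = correlation(heights_m, weights)  # same as corr_hw
```

The rescaling step is the practical reason correlation is preferred when features are measured in different units.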

Covariance Matrix

For a random vector with d features, the relation between all features can be summarized using the Covariance Matrix ($\Sigma$).

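$$
\Sigma =
\begin{bmatrix}
\mathrm{Var}(X_1) & \mathrm{Cov}(X_1, X_2) & \cdots & \mathrm{Cov}(X_1, X_d) \\
\mathrm{Cov}(X_2, X_1) & \mathrm{Var}(X_2) & \cdots & \mathrm{Cov}(X_2, X_d) \\
\vdots & \vdots & \ddots & \vdots \\
\mathrm{Cov}(X_d, X_1) & \mathrm{Cov}(X_d, X_2) & \cdots & \mathrm{Var}(X_d)
\end{bmatrix}
$$

- Diagonal elements : Variances of the individual features.

- Off-Diagonal elements : Covariances between feature pairs.

- $\mathrm{Cov}(X, Y) = \mathrm{Cov}(Y, X)$, which means that the matrix is symmetric.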

Note : If one feature is a perfect linear combination of other features, then there is redundancy in the information, and the covariance matrix is singular (i.e., its rank is less than the number of features).
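To make the singularity remark concrete, the sketch below builds a covariance matrix for three made-up features where the third is an exact linear combination of the first two, then checks symmetry and the determinant. The helpers `covariance_matrix` and `det3` are illustrative; `det3` handles only the 3 x 3 case.

```python
def covariance(xs, ys):
    """Sample covariance with the n - 1 denominator."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)


def covariance_matrix(features):
    """features: list of d columns, each holding n values. Returns a d x d matrix."""
    return [[covariance(fi, fj) for fj in features] for fi in features]


def det3(m):
    """Determinant of a 3 x 3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [2.0, 1.0, 4.0, 3.0, 5.0]
x3 = [2 * a + b for a, b in zip(x1, x2)]  # redundant: x3 = 2*x1 + x2

sigma = covariance_matrix([x1, x2, x3])
# [[2.5, 2.0, 7.0],
#  [2.0, 2.5, 6.5],
#  [7.0, 6.5, 20.5]]

is_symmetric = all(sigma[i][j] == sigma[j][i] for i in range(3) for j in range(3))
determinant = det3(sigma)  # 0.0, so the matrix is singular
```

Dropping `x3` leaves the 2 x 2 block `[[2.5, 2.0], [2.0, 2.5]]`, whose determinant is 2.25, so the remaining two features carry no such redundancy.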

Correlation Matrix

While the Covariance Matrix tells the direction of the relationship and the spread, the Correlation Matrix provides a normalized score of the relationship strength, making it easier to compare features with different units (e.g., comparing "Height in cm" vs. "Weight in kg").

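$$
R =
\begin{bmatrix}
1 & \rho_{12} & \cdots & \rho_{1d} \\
\rho_{21} & 1 & \cdots & \rho_{2d} \\
\vdots & \vdots & \ddots & \vdots \\
\rho_{d1} & \rho_{d2} & \cdots & 1
\end{bmatrix}
$$

- $\rho_{ij}$ : It is the Pearson Coefficient between features $X_i$ and $X_j$.

- $\rho_{ij} = \dfrac{\mathrm{Cov}(X_i, X_j)}{\sigma_{X_i}\sigma_{X_j}}$

- It lies in the interval $[-1, 1]$.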

- It is also a symmetric matrix.
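Since each $\rho_{ij}$ is a covariance divided by two standard deviations, the correlation matrix can be derived from the covariance matrix alone. A minimal sketch, assuming an illustrative 2 x 2 covariance matrix with made-up entries:

```python
import math


def correlation_matrix(sigma):
    """Normalize a covariance matrix: R[i][j] = sigma[i][j] / (std_i * std_j)."""
    d = len(sigma)
    std = [math.sqrt(sigma[i][i]) for i in range(d)]  # diagonal holds the variances
    return [[sigma[i][j] / (std[i] * std[j]) for j in range(d)] for i in range(d)]


# Illustrative covariance matrix: variances 4 and 9, covariance 2.
sigma = [[4.0, 2.0],
         [2.0, 9.0]]

R = correlation_matrix(sigma)
# Diagonal entries are 1.0; the off-diagonal entry is 2 / (2 * 3), about 0.33.
```

The diagonal becomes 1 because every feature is perfectly correlated with itself, and symmetry carries over from the covariance matrix.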

Types of Machine Learning

- Supervised Learning

  - The model learns from labelled data. For every input, the correct output is already known. The goal is for the algorithm to learn the mapping function from the input to the output.

  - Examples : Linear Regression, Logistic Regression, SVM, Decision Tree, KNN, Neural Networks, etc.

  - Use cases : Email spam filtering, Medical diagnosis, Credit scoring, etc.

- Unsupervised Learning

  - The model works with unlabelled data and finds hidden patterns.

  - Examples : Clustering, Dimensionality Reduction (PCA)

  - Use cases : Customer segmentation, Anomaly detection, Association discovery

- Semi-Supervised Learning

  - The model is trained on a small amount of labelled data and a large amount of unlabelled data.

  - Examples : Self-training models, Transformers

  - Use cases : Image classification when labelling data is expensive.

- Reinforcement Learning

  - An agent learns to make decisions by performing actions in an environment to achieve a goal. It receives rewards for good actions and penalties for bad ones.

  - Examples : Policy Gradient Methods

  - Use cases : Robotics, Self-driving cars, Game playing (chess, Go)

Supervised Learning

- Start with a labelled dataset where inputs (features) and outputs (labels) are known.

- Split the dataset into a training set and a test set.

  - Training Set : Used to build and tune models. It is further split into 2 parts :

    - Train split

    - Validation split

  - Test Set : Held out and never used during training or model selection. It is only used at the very end to estimate real-world performance.

- Using the train split, multiple candidate models are fitted based on different hyperparameters or algorithms.

- The validation split is used to evaluate these models during development.

- Based on the validation performance, the best model is selected (e.g., highest validation accuracy or lowest loss).

- The selected model becomes the final trained model.

- It is evaluated on the test set, producing an unbiased estimate of its performance.
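The workflow above can be sketched end to end in plain Python. Everything concrete here is an assumption made for illustration: the synthetic one-feature dataset, the 60/60/180 split sizes, the use of k-nearest neighbours as the model family, and the candidate values of `k` as the hyperparameter being tuned.

```python
import random

random.seed(0)


def make_point():
    """Synthetic labelled example: label is 1 when x > 0.5, with 10% label noise."""
    x = random.random()
    y = int(x > 0.5)
    if random.random() < 0.1:
        y = 1 - y
    return x, y


data = [make_point() for _ in range(300)]
random.shuffle(data)

# Hold out the test set first, then split the rest into validation and train.
test_set = data[:60]
val_split = data[60:120]
train_split = data[120:]


def knn_predict(train, x, k):
    """Majority vote among the k training points closest to x."""
    neighbours = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    votes = sum(label for _, label in neighbours)
    return int(2 * votes > k)


def accuracy(train, eval_set, k):
    """Fraction of eval_set points whose predicted label matches the true label."""
    correct = sum(knn_predict(train, x, k) == y for x, y in eval_set)
    return correct / len(eval_set)


# Candidate models differ only in the hyperparameter k (odd values avoid ties).
candidates = [1, 5, 15]
val_scores = {k: accuracy(train_split, val_split, k) for k in candidates}

# Model selection uses validation accuracy only.
best_k = max(val_scores, key=val_scores.get)

# The test set is touched once, at the very end.
test_accuracy = accuracy(train_split, test_set, best_k)
print(best_k, val_scores, test_accuracy)
```

The key design point is that `test_set` never influences the choice of `best_k`; if it did, the final accuracy would no longer be an unbiased estimate.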

With this, the basics are over.