Regression Problem

It is a statistical process for estimating the relationships between a dependent variable (outcome) and one or more independent variables (features).

- Input X : Attribute variables or features (typically numerical values).

- Output Y : Response variable that is aimed to be predicted.

Goal: estimate a function $f(X, \beta)$ such that $Y \approx f(X, \beta)$.

It is called linear regression because this relation is assumed to be linear with an additive error term ϵ representing statistical noise.

For a single feature $x$, the regression model is defined as:

$$Y_i = \beta_0 + \beta_1 x_i + \epsilon_i$$

- $Y_i$ : observed response for the $i$-th training example

- $x_i$ : input feature for the $i$-th training example

- $\beta_0$ : intercept (bias)

- $\beta_1$ : slope (weight)

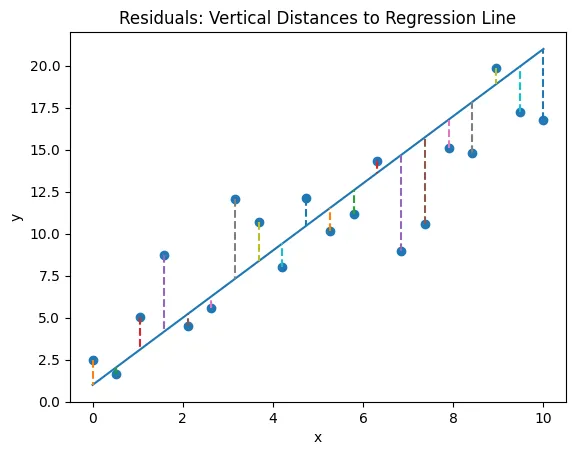

- $\epsilon_i$ : residual error

This represents the actual values in the training dataset.

The fitted values (predictions) are:

$$\hat{y}_i = \hat{\beta}_0 + \hat{\beta}_1 x_i$$

Ordinary Least Squares (OLS)

OLS estimates the unknown parameters ($\beta$) by minimizing the sum of the squared differences between the observed values of the dependent variable and the values predicted by the linear function. Squaring penalizes large errors more than small ones.

Derivation of Sum of Squared Errors (SSE)

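The sum of squared errors (SSE), also called the residual sum of squares, is used as the cost function $L$:

$$L(\beta_0, \beta_1) = \sum_{i=1}^{n} \epsilon_i^2 = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = \sum_{i=1}^{n} \left(y_i - (\beta_0 + \beta_1 x_i)\right)^2$$

Goal: $\min_{\beta_0, \beta_1} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2$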

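To find the optimal $\beta_0$ and $\beta_1$, we take the partial derivative of $L$ with respect to each parameter and set it to 0.

1. Derivative w.r.t. $\beta_0$ :

To minimize, the derivative must be equal to 0:

$$\frac{\partial L}{\partial \beta_0} = -2 \sum_{i=1}^{n} (y_i - \beta_0 - \beta_1 x_i) = 0$$

Dividing by $-2$, and since $\beta_0$ and $\beta_1$ are constants with respect to the sum:

$$\sum_{i=1}^{n} y_i - n\beta_0 - \beta_1 \sum_{i=1}^{n} x_i = 0$$

Dividing by $n$:

$$\frac{1}{n}\sum_{i=1}^{n} y_i - \frac{1}{n} n \beta_0 - \frac{1}{n} \beta_1 \sum_{i=1}^{n} x_i = 0$$

$$\bar{y} - \beta_0 - \beta_1 \bar{x} = 0$$

$$\beta_0 = \bar{y} - \beta_1 \bar{x}$$

2. Derivative w.r.t. $\beta_1$ :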

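$$\frac{\partial L}{\partial \beta_1} = -2 \sum_{i=1}^{n} x_i (y_i - \beta_0 - \beta_1 x_i) = 0$$

Substituting $\beta_0 = \bar{y} - \beta_1 \bar{x}$ and dividing by $-2$:

$$\sum_{i=1}^{n} x_i \left(y_i - (\bar{y} - \beta_1 \bar{x}) - \beta_1 x_i\right) = 0$$

$$\sum_{i=1}^{n} x_i (y_i - \bar{y} + \beta_1 \bar{x} - \beta_1 x_i) = 0$$

$$\sum_{i=1}^{n} x_i \left((y_i - \bar{y}) - \beta_1 (x_i - \bar{x})\right) = 0$$

$$\sum_{i=1}^{n} x_i (y_i - \bar{y}) - \beta_1 \sum_{i=1}^{n} x_i (x_i - \bar{x}) = 0$$

Rearranging:

$$\hat{\beta}_1 = \frac{\sum_{i=1}^{n} x_i (y_i - \bar{y})}{\sum_{i=1}^{n} x_i (x_i - \bar{x})}$$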

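Identity: $\sum_{i=1}^{n} (x_i - \bar{x}) = 0$, and likewise $\sum_{i=1}^{n} (y_i - \bar{y}) = 0$.

Thus, the numerator becomes:

$$\sum_{i=1}^{n} x_i (y_i - \bar{y}) = \sum_{i=1}^{n} x_i (y_i - \bar{y}) - \bar{x} \underbrace{\sum_{i=1}^{n} (y_i - \bar{y})}_{=0} = \sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})$$

By the same argument (subtracting $\bar{x} \sum_{i=1}^{n} (x_i - \bar{x}) = 0$), the denominator becomes:

$$\sum_{i=1}^{n} x_i (x_i - \bar{x}) = \sum_{i=1}^{n} (x_i - \bar{x})(x_i - \bar{x}) = \sum_{i=1}^{n} (x_i - \bar{x})^2$$

And finally the slope $\beta_1$ becomes:

$$\hat{\beta}_1 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2}$$

And in terms of covariance and variance:

$$\hat{\beta}_1 = \frac{Cov(X, Y)}{Var(X)}$$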
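The closed-form estimates derived above can be sketched in a few lines of plain Python. The function name `fit_simple_ols` and the toy data are illustrative assumptions, not part of the original notes.

```python
def fit_simple_ols(xs, ys):
    """Return (beta0, beta1) that minimize the sum of squared errors."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Numerator and denominator of the slope formula
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    if s_xx == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    beta1 = s_xy / s_xx
    beta0 = y_bar - beta1 * x_bar
    return beta0, beta1


# Hypothetical toy data lying exactly on y = 1 + 2x
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
beta0, beta1 = fit_simple_ols(xs, ys)
print(beta0, beta1)  # intercept and slope
```

Because the toy points lie exactly on a line, the fit should recover an intercept of 1 and a slope of 2.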

Sum of Squares Decomposition and $R^2$

To evaluate the goodness of fit, we decompose the total variability of the response variable.

- SST (Total Sum of Squares) : Measures the total variation in the observed $Y$ :

$$SST = \sum_{i=1}^{n} (y_i - \bar{y})^2$$

- SSR (Sum of Squares Regression) : Measures the variation explained by the model :

$$SSR = \sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2$$

- SSE (Sum of Squares Error) : Measures the unexplained variation (residuals) :

$$SSE = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2$$

For an OLS fit with an intercept, these are related as:

$$SST = SSR + SSE$$

Coefficient of Determination ($R^2$)

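$R^2$ represents the proportion of the variance in the dependent variable that is explained by the independent variable(s).

$$R^2 = \frac{SSR}{SST}$$

Also,

$$1 = \frac{SST}{SST} = \frac{SSR + SSE}{SST} = R^2 + \frac{SSE}{SST} \implies R^2 = 1 - \frac{SSE}{SST}$$

- A perfect fit has $R^2 = 1$ (that is, $SSE = 0$).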

Correlation Coefficient ($r^2$)

For simple linear regression, $R^2$ is the square of the Pearson correlation coefficient ($r$) :

$$r = \frac{Cov(X, Y)}{\sigma_X \sigma_Y} \implies R^2 = r^2$$
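As a quick numerical check of the decomposition and of $R^2 = r^2$, the sketch below fits a line to a small made-up dataset and computes all three sums of squares. The helper name `sums_of_squares_and_r` and the data values are assumptions chosen for illustration.

```python
import math


def sums_of_squares_and_r(xs, ys):
    """Fit simple OLS, then return (SST, SSR, SSE, r)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    s_yy = sum((y - y_bar) ** 2 for y in ys)

    beta1 = s_xy / s_xx
    beta0 = y_bar - beta1 * x_bar
    preds = [beta0 + beta1 * x for x in xs]

    sst = s_yy
    ssr = sum((p - y_bar) ** 2 for p in preds)
    sse = sum((y - p) ** 2 for y, p in zip(ys, preds))
    # Pearson r: the 1/n factors in Cov and the standard deviations cancel
    r = s_xy / math.sqrt(s_xx * s_yy)
    return sst, ssr, sse, r


# Hypothetical noisy data, roughly y = 2x
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
sst, ssr, sse, r = sums_of_squares_and_r(xs, ys)
r2 = ssr / sst
print(f"SST={sst:.4f} SSR+SSE={ssr + sse:.4f} R^2={r2:.4f} r^2={r * r:.4f}")
```

Up to floating-point rounding, `SST` should equal `SSR + SSE`, and `R^2` should equal both `1 - SSE/SST` and `r^2`.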

Types of Errors

Different metrics are used for different purposes:

1. Mean Squared Error (MSE) :

$$MSE = \frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2$$

- Differentiable and useful for optimization.

- Heavily penalizes large errors (and therefore outliers) because of the squaring term.

2. Root Mean Squared Error (RMSE) :

$$RMSE = \sqrt{MSE}$$

- Same unit as the target variable $Y$, making it interpretable.

3. Mean Absolute Error (MAE) :

$$MAE = \frac{1}{n} \sum_{i=1}^{n} |y_i - \hat{y}_i|$$

- More robust to outliers than MSE, but not differentiable where the error is 0.
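The three metrics can be compared on the same predictions to see how differently they react to one large miss. This is a minimal sketch; the function names and the sample values are assumptions.

```python
import math


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error, in the same unit as the target."""
    return math.sqrt(mse(y_true, y_pred))


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(y - p) for y, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical targets and predictions; the last prediction is off by 10
y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.0, 5.0, 8.0, 19.0]

mse_value = mse(y_true, y_pred)
rmse_value = rmse(y_true, y_pred)
mae_value = mae(y_true, y_pred)
print(mse_value, rmse_value, mae_value)
```

Here the errors are 1, 0, -1 and -10, so the single large miss contributes 100 of the 102 total squared error, while it contributes only 10 of the 12 total absolute error.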

Multiple Linear Regression

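When multiple features $x_1, x_2, \dots, x_n$ are present, the model becomes:

$$\hat{y}^{(i)} = w_0 + w_1 x_1^{(i)} + \dots + w_n x_n^{(i)}$$

Vector-Matrix representation

Add a bias term $x_0 = 1$ to every input so that the intercept $w_0$ becomes part of the weight vector:

- Input Matrix $X$ : Dimensions $N \times (n+1)$

$$X = \begin{bmatrix} 1 & x_1^{(1)} & \dots & x_n^{(1)} \\ 1 & x_1^{(2)} & \dots & x_n^{(2)} \\ \vdots & \vdots & \ddots & \vdots \\ 1 & x_1^{(N)} & \dots & x_n^{(N)} \end{bmatrix}$$

- Weight Vector $w$ : Dimensions $(n+1) \times 1$

$$w = [w_0, w_1, \dots, w_n]^T$$

- Target Vector $y$ : Dimensions $N \times 1$

The prediction can be written as an inner product for a single example, $\hat{y}^{(i)} = w^T x^{(i)}$, or as a matrix multiplication for the whole dataset:

$$\underbrace{\hat{Y}}_{N \times 1} = \underbrace{X}_{N \times (n+1)} \cdot \underbrace{w}_{(n+1) \times 1}$$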
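To make the shapes concrete, the following sketch builds the design matrix by prepending the bias column and computes all predictions as one matrix-vector product. It uses plain lists rather than a numerical library; the helper names and numbers are assumptions.

```python
def add_bias_column(features):
    """Prepend x0 = 1 to every row: an N x n matrix becomes N x (n+1)."""
    return [[1.0] + list(row) for row in features]


def predict(X, w):
    """Matrix-vector product X w: one inner product per training example."""
    return [sum(x_j * w_j for x_j, w_j in zip(row, w)) for row in X]


# Hypothetical data with N = 3 examples and n = 2 features
features = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
w = [0.5, 1.0, -1.0]  # [w0, w1, w2], so (n+1) = 3 entries

X = add_bias_column(features)
y_hat = predict(X, w)
print(len(X), len(X[0]), y_hat)
```

Each prediction here is `0.5 + x1 - x2`, which evaluates to -0.5 for all three rows.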

Goal: to find the coefficients $w$, minimize the sum of squared errors (SSE).

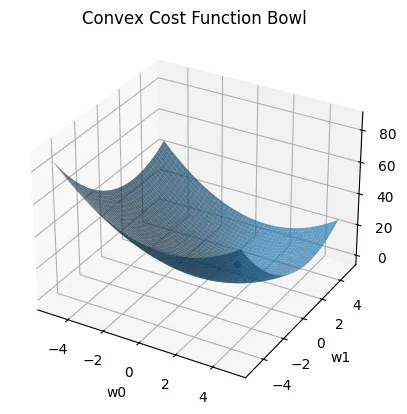

Define the cost function

The cost function quantifies the error between a model's predicted outputs and the actual target values:

$$J(w) = \sum_{i=1}^{N} (y_i - \hat{y}_i)^2, \qquad w^* = \arg\min_{w} J(w)$$

- $w^*$ : optimal value of the parameter.

$SSE = \|y - \hat{y}\|^2$, which is the squared magnitude of the vector $y - \hat{y}$. Since $\|z\|^2 = z_1^2 + \dots + z_p^2 = z^T z$ :

$$SSE = (y - \hat{y})^T (y - \hat{y})$$

And since $\hat{y} = Xw$ :

$$SSE = (y - Xw)^T (y - Xw) = J(w)$$

Thus,

$$J(w) = (y^T - (Xw)^T)(y - Xw)$$

$$J(w) = (y^T - w^T X^T)(y - Xw)$$

Expanding gives:

$$J(w) = y^T y - y^T X w - w^T X^T y + w^T X^T X w$$

The term $y^T X w$ is a scalar ($1 \times 1$). Because a scalar equals its own transpose, $y^T X w = (y^T X w)^T = w^T X^T y$. Thus,

$$J(w) = y^T y - 2 w^T X^T y + w^T X^T X w$$

Computing the gradient

To find the optimal w that minimizes error, we calculate the gradient of J(w) with respect to w and set it to zero.

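$$\frac{\partial J(w)}{\partial w} = \frac{\partial}{\partial w}\left(y^T y - 2 w^T X^T y + w^T X^T X w\right)$$

Taking the terms one at a time:

$$\frac{\partial}{\partial w}(y^T y) = 0$$

- Constant with respect to $w$.

$$\frac{\partial}{\partial w}(-2 w^T X^T y) = -2 X^T y$$

- $w$ is our variable vector ($d \times 1$, where $d = n + 1$).

- $a = -2 X^T y$ is a constant vector ($d \times 1$) because it doesn't contain $w$.

- The term $-2 w^T X^T y$ can be rewritten as the dot product $w^T a$.

- Rule: the derivative of a dot product with respect to one of the vectors is just the other vector:

$$\frac{\partial}{\partial w}(w^T a) = a$$

$$\frac{\partial}{\partial w}(w^T X^T X w) = 2 X^T X w$$

- $w$ is a vector.

- $A = X^T X$ is a square, symmetric matrix.

- The expression $w^T A w$ is called a quadratic form.

- Rule: the derivative of a quadratic form $x^T A x$ depends on whether the matrix $A$ is symmetric. In general it is $(A + A^T)x$; for a symmetric $A$ this reduces to:

$$\frac{\partial}{\partial x}(x^T A x) = 2 A x$$

Another rule used:

$$\frac{\partial}{\partial w}(Xw) = X^T$$

Thus, the final gradient becomes:

$$\nabla_w J(w) = -2 X^T y + 2 X^T X w = -2 X^T (y - Xw)$$

Solving for w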

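Equating the gradient to 0:

$$\nabla_w J(w) = -2 X^T (y - Xw) = 0$$

$$X^T (y - Xw) = 0$$

$$X^T X w = X^T y$$

Thus, the closed-form solution, known as the normal equation, is:

$$w = (X^T X)^{-1} X^T y$$

Limitations

- It requires $X^T X$ to be invertible, i.e., no feature may be an exact linear combination of the others (no perfect multicollinearity).

- For a large number of features, computing the inverse becomes too expensive: inverting the $(n+1) \times (n+1)$ matrix $X^T X$ costs roughly $O(n^3)$ operations.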
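The normal equation can be tried out on a tiny dataset. The sketch below is an illustration under stated assumptions, not a production solver: instead of forming the inverse explicitly, it solves the linear system $X^T X w = X^T y$ with a small hand-written Gaussian elimination (a deliberate substitution, since solving the system is usually preferred to inverting), and then checks that the gradient $-2X^T(y - Xw)$ vanishes at the solution. All function names and data values are hypothetical.

```python
def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(A[i]) + [b[i]] for i in range(n)]  # augmented matrix [A | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("X^T X is singular: features are linearly dependent")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def normal_equation(X, y):
    """Return w satisfying X^T X w = X^T y."""
    Xt = transpose(X)
    XtX = matmul(Xt, X)
    Xty = [sum(a * b for a, b in zip(row, y)) for row in Xt]
    return solve(XtX, Xty)


def gradient(X, y, w):
    """Gradient of the SSE cost: -2 X^T (y - Xw)."""
    residual = [yi - sum(a * b for a, b in zip(row, w)) for row, yi in zip(X, y)]
    return [-2.0 * sum(c * r for c, r in zip(col, residual)) for col in transpose(X)]


# Hypothetical data: bias column plus one feature
X = [[1.0, 1.0], [1.0, 2.0], [1.0, 3.0], [1.0, 4.0]]
y = [3.1, 4.9, 7.2, 8.8]

w = normal_equation(X, y)
grad = gradient(X, y, w)
print(w, grad)
```

For this data the solution should be close to `w0 = 1.15` and `w1 = 1.94`, with both gradient components numerically zero. Passing a matrix with duplicated columns triggers the singularity error, which mirrors the invertibility limitation listed above.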

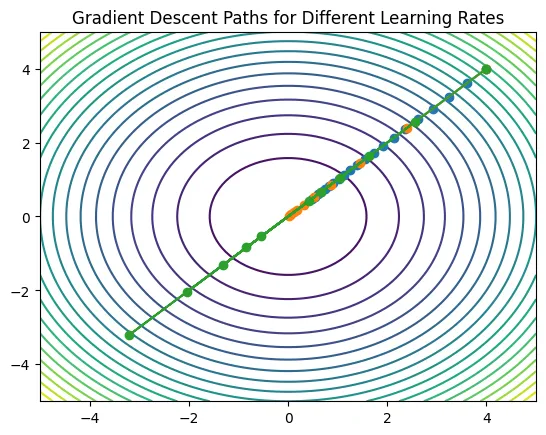

Gradient Descent

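When the normal equation becomes too computationally expensive, we use Gradient Descent, an iterative optimization algorithm, to minimize the cost:

$$J(w) = \frac{1}{2n} \sum_{i=1}^{n} \left(y^{(i)} - w^T x^{(i)}\right)^2$$

- Here $n$ denotes the number of training examples.

- The $\frac{1}{2}$ factor makes the derivative cleaner.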

Update Rule

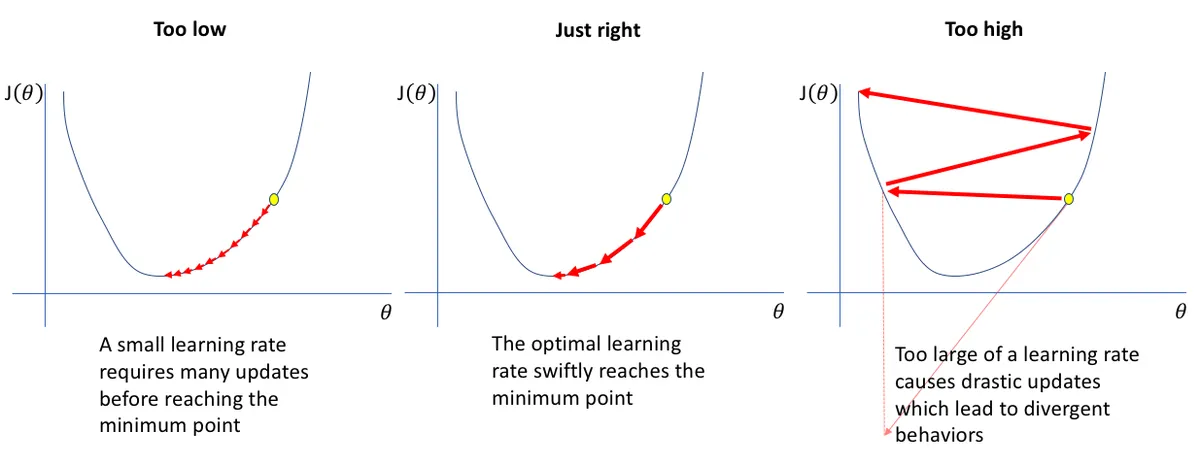

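Update the weights by moving in the direction opposite to the gradient (the negative gradient), scaled by the learning rate $\alpha$ :

$$w_j := w_j - \alpha \frac{\partial J(w)}{\partial w_j}$$

The gradient for a specific weight $w_j$ is:

$$\frac{\partial J(w)}{\partial w_j} = \frac{1}{n} \sum_{i=1}^{n} \left(w^T x^{(i)} - y^{(i)}\right) x_j^{(i)}$$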
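One way to gain confidence in the gradient formula is to compare it against a finite-difference approximation of the cost. The sketch below does that for a small made-up dataset; the function names, the data, and the step size `eps` are assumptions.

```python
def cost(X, y, w):
    """J(w) = 1/(2n) * sum over examples of (w^T x - y)^2."""
    n = len(X)
    total = 0.0
    for row, target in zip(X, y):
        pred = sum(a * b for a, b in zip(row, w))
        total += (pred - target) ** 2
    return total / (2 * n)


def gradient(X, y, w):
    """Analytic gradient: dJ/dw_j = 1/n * sum of (w^T x - y) * x_j."""
    n = len(X)
    errors = [sum(a * b for a, b in zip(row, w)) - target
              for row, target in zip(X, y)]
    return [sum(e * row[j] for e, row in zip(errors, X)) / n
            for j in range(len(w))]


def numerical_gradient(X, y, w, eps=1e-6):
    """Central finite differences, one coordinate at a time."""
    grads = []
    for j in range(len(w)):
        w_plus = list(w)
        w_minus = list(w)
        w_plus[j] += eps
        w_minus[j] -= eps
        grads.append((cost(X, y, w_plus) - cost(X, y, w_minus)) / (2 * eps))
    return grads


# Hypothetical data: first column is the bias term x0 = 1
X = [[1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
y = [2.0, 4.0, 7.0]
w = [0.5, -0.25]

analytic = gradient(X, y, w)
numeric = numerical_gradient(X, y, w)
print(analytic, numeric)
```

The two results should agree to several decimal places; a mismatch usually points to a sign or indexing error in the analytic gradient.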

Types of Gradient Descent

| Type | Description | Pros | Cons |
|---|---|---|---|
| Batch GD | Uses all N training examples for every update. | Stable convergence. | Slow for large datasets; memory intensive. |
| Stochastic GD (SGD) | Uses 1 random training example per update. | Faster iterations; can escape local minima. | High-variance updates; noisy convergence. |
| Mini-Batch GD | Uses a small batch (b) of examples per update. | Balances stability and speed. | Batch size b is an extra hyperparameter to tune. |
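The three variants in the table differ only in how many examples feed each update, so a single routine with a `batch_size` parameter can cover all of them: `batch_size = N` gives batch GD, `batch_size = 1` gives SGD, and anything in between gives mini-batch GD. The following is a minimal sketch; the function name, the default hyperparameters, and the noise-free toy data are assumptions.

```python
import random


def minibatch_gd(X, y, batch_size, alpha=0.1, epochs=2000, seed=0):
    """Gradient descent on the squared-error cost using batches of rows."""
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    indices = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit examples in a new random order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            grad = [0.0] * len(w)
            for i in batch:
                error = sum(a * b for a, b in zip(X[i], w)) - y[i]
                for j in range(len(w)):
                    grad[j] += error * X[i][j] / len(batch)
            w = [w_j - alpha * g_j for w_j, g_j in zip(w, grad)]
    return w


# Hypothetical noise-free data on y = 1 + 2x; first column is the bias term
X = [[1.0, x] for x in (0.0, 1.0, 2.0, 3.0)]
y = [1.0, 3.0, 5.0, 7.0]

w_batch = minibatch_gd(X, y, batch_size=4)  # batch GD
w_mini = minibatch_gd(X, y, batch_size=2)   # mini-batch GD
w_sgd = minibatch_gd(X, y, batch_size=1)    # stochastic GD
print(w_batch, w_mini, w_sgd)
```

Because the toy data contains no noise, all three variants should settle near `[1, 2]`. With noisy data, the smaller batch sizes would typically keep jittering around the minimum unless the learning rate is decayed.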

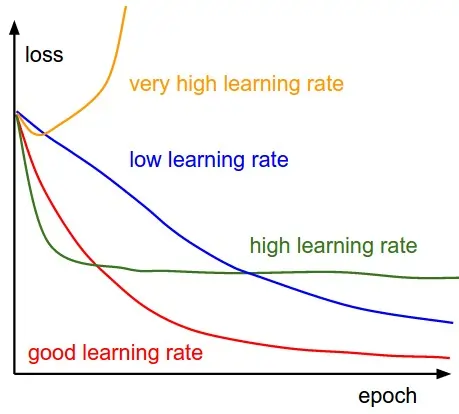

Learning Rate (α)

The learning rate is a critical hyperparameter that controls the step size taken towards a minimum of the loss function during optimization.

- α too small: Convergence is guaranteed but very slow; requires many updates.

- α too large: The steps may overshoot the minimum, causing the algorithm to oscillate or diverge (cost increases).

- Well-chosen α: The cost decreases smoothly and reaches the minimum.
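The effect of the learning rate can be observed by running the same number of batch updates with three different values of α. This is a sketch on a hypothetical two-point dataset; the specific values 0.001, 0.1 and 1.0 are assumptions picked to show the three regimes.

```python
def cost(w0, w1, data):
    """J = 1/(2n) * sum of squared prediction errors."""
    n = len(data)
    return sum((w0 + w1 * x - y) ** 2 for x, y in data) / (2 * n)


def run_gd(alpha, data, steps=50):
    """Run batch gradient descent from w = (0, 0); return the final cost."""
    w0, w1 = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        g0 = sum((w0 + w1 * x - y) for x, y in data) / n
        g1 = sum((w0 + w1 * x - y) * x for x, y in data) / n
        w0, w1 = w0 - alpha * g0, w1 - alpha * g1
    return cost(w0, w1, data)


data = [(1.0, 2.0), (2.0, 4.0)]  # hypothetical points on y = 2x
initial_cost = cost(0.0, 0.0, data)
final_costs = {alpha: run_gd(alpha, data) for alpha in (0.001, 0.1, 1.0)}

print("initial cost:", initial_cost)
for alpha, value in final_costs.items():
    print(f"alpha={alpha}: cost after 50 steps = {value:.6g}")
```

With α = 0.001 the cost drops only slightly, with α = 0.1 it falls far below the starting value, and with α = 1.0 it grows beyond the starting value because every step overshoots.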

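Worked-out examples of Gradient Descent

Both examples use the same setup, as implied by the calculations that follow: two training points $(x^{(1)}, y^{(1)}) = (1, 2)$ and $(x^{(2)}, y^{(2)}) = (2, 4)$, initial weights $w_0 = w_1 = 0$, and learning rate $\alpha = 0.1$.

Batch Gradient Descent

It calculates the gradient using the sum over all points ($N = 2$).

Predictions with the initial weights:

$$\hat{y}^{(1)} = 0 + 0(1) = 0$$

$$\hat{y}^{(2)} = 0 + 0(2) = 0$$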

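Gradients:

$$\frac{\partial J(w)}{\partial w_0} = \frac{1}{n} \sum_{i=1}^{n} \left(w^T x^{(i)} - y^{(i)}\right)$$

- Because $x_j^{(i)} = 1$ for $j = 0$. Thus, with $\text{Error}^{(i)} = \hat{y}^{(i)} - y^{(i)}$ :

$$\frac{\partial J}{\partial w_0} = \frac{1}{2} \sum_{i=1}^{2} \text{Error}^{(i)} \cdot x_0^{(i)} = \frac{1}{2}\left(-2(1) - 4(1)\right) = -3$$

$$\frac{\partial J(w)}{\partial w_1} = \frac{1}{n} \sum_{i=1}^{n} \left(w^T x^{(i)} - y^{(i)}\right) x_1^{(i)}$$

$$\frac{\partial J}{\partial w_1} = \frac{1}{2} \sum_{i=1}^{2} \text{Error}^{(i)} \cdot x_1^{(i)} = \frac{1}{2}\left(-2(1) - 4(2)\right) = -5$$

Update:

$$w_0 := 0 - 0.1(-3) = 0.3$$

$$w_1 := 0 - 0.1(-5) = 0.5$$

Thus, the model after 1 epoch (1 complete pass through the dataset) is:

$$y = 0.3 + 0.5x$$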
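The batch update above can be replayed in code. The sketch assumes the setup implied by the arithmetic: points (1, 2) and (2, 4), zero initial weights, and α = 0.1. The function name `batch_gd_step` is hypothetical.

```python
def batch_gd_step(w0, w1, data, alpha):
    """One batch update: average the gradient over every example."""
    n = len(data)
    errors = [(w0 + w1 * x) - y for x, y in data]  # prediction minus target
    grad_w0 = sum(errors) / n                      # x0 = 1 for every example
    grad_w1 = sum(e * x for e, (x, _) in zip(errors, data)) / n
    return w0 - alpha * grad_w0, w1 - alpha * grad_w1


# Assumed data: the errors -2 and -4 above imply y = 2 at x = 1 and y = 4 at x = 2
data = [(1.0, 2.0), (2.0, 4.0)]
w0, w1 = batch_gd_step(0.0, 0.0, data, alpha=0.1)
print(round(w0, 6), round(w1, 6))
```

The result matches the hand calculation, up to floating-point rounding: `w0` is 0.3 and `w1` is 0.5.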

Stochastic Gradient Descent (SGD)

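It updates the weights after each example.

$$w_j := w_j - \alpha \underbrace{\left(\hat{y}^{(i)} - y^{(i)}\right) x_j^{(i)}}_{\text{gradient for a single example}}$$

Iteration 1:

- Prediction : $\hat{y}^{(1)} = w_0(1) + w_1 x^{(1)} = 0(1) + 0(1) = 0$

- $\text{Error} = \hat{y}^{(1)} - y^{(1)} = 0 - 2 = -2$

- Gradient for $w_0$, as $x_0 = 1$ :

$$\frac{\partial J}{\partial w_0} = \text{Error} \times 1 = -2$$

- Gradient for $w_1$, as $x^{(1)} = 1$ :

$$\frac{\partial J}{\partial w_1} = \text{Error} \times x^{(1)} = -2 \times 1 = -2$$

Update:

$$w_0 := 0 - 0.1(-2) = 0.2$$

$$w_1 := 0 - 0.1(-2) = 0.2$$

Thus, the current model is: $y = 0.2 + 0.2x$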

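Iteration 2:

Use the updated weights from Iteration 1.

- Prediction : $\hat{y}^{(2)} = 0.2(1) + 0.2(2) = 0.2 + 0.4 = 0.6$

- $\text{Error} = \hat{y}^{(2)} - y^{(2)} = 0.6 - 4 = -3.4$

- Compute gradients:

$$\frac{\partial J}{\partial w_0} = -3.4 \times 1 = -3.4$$

$$\frac{\partial J}{\partial w_1} = -3.4 \times 2 = -6.8$$

Update:

$$w_0 := 0.2 - 0.1(-3.4) = 0.2 + 0.34 = 0.54$$

$$w_1 := 0.2 - 0.1(-6.8) = 0.2 + 0.68 = 0.88$$

Thus, the final model after 1 epoch is:

$$y = 0.54 + 0.88x$$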
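The two SGD iterations can be replayed the same way. The sketch assumes the data and hyperparameters implied by the arithmetic above, and visits the examples in the fixed order used in the hand calculation rather than a random one. The function name `sgd_epoch` is hypothetical.

```python
def sgd_epoch(w0, w1, data, alpha):
    """One pass over the data, updating the weights after every example."""
    for x, y in data:
        error = (w0 + w1 * x) - y  # prediction minus target for this example
        # Both weights are updated from the same pre-update prediction error
        w0, w1 = w0 - alpha * error, w1 - alpha * error * x
    return w0, w1


# Assumed data: points (1, 2) and (2, 4), zero initial weights, alpha = 0.1
data = [(1.0, 2.0), (2.0, 4.0)]
w0, w1 = sgd_epoch(0.0, 0.0, data, alpha=0.1)
print(round(w0, 6), round(w1, 6))
```

After one epoch this gives `w0` of 0.54 and `w1` of 0.88, which differs from the batch result because the second update already uses the weights changed by the first.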

This concludes the post on linear regression, covering OLS, the normal equation, gradient descent, and the types of error metrics.