Classification Problem

In supervised learning, when the target variable $y$ is discrete or categorical, the task is called Classification. It asks "which category?", unlike regression, which asks "how much?".

Given a dataset $D=\{(x^{(i)},y^{(i)})\}_{i=1}^{m}$, where $x\in\mathbb{R}^n$ is the feature vector, we seek a function $f:\mathbb{R}^n\to\{C_1,C_2,\dots,C_k\}$.

In Binary Classification, $y\in\{0,1\}$, where:

- 0 : Negative class (e.g., Benign tumor, Non-spam).

- 1 : Positive class (e.g., Malignant tumor, Spam).

Logistic Regression is a Generalized Linear Model (GLM)

Logistic Regression is a regression model for probabilities. It predicts a continuous probability value $\hat{y}\in[0,1]$, which is discretized using a threshold (typically 0.5) to perform classification.

Thus, it is a regression model adapted for classification tasks.

Deriving Logistic Regression from Linear Regression

A linear model can be adapted for binary classification by mapping the output of the linear equation $z=w^Tx+b$ to the interval $(0,1)$. This is achieved using the Odds Ratio and the Logit function.

1. Odds Ratio

The odds of an event occurring are the ratio of the probability of success $P$ to the probability of failure $1-P$.

$$\text{Odds}=\frac{P(y=1\mid x)}{1-P(y=1\mid x)}$$

- The range of the odds is $[0,\infty)$.

2. Logit (Log-Odds)

The output range of a linear regression model is $(-\infty,\infty)$. To map the range of the odds, $[0,\infty)$, onto this range, take the natural log of the odds:

$$\text{logit}(P)=\ln\left(\frac{P}{1-P}\right)=w^Tx+b$$

This relation means that the log-odds are linear with respect to the input features $x$.
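To make the two mappings concrete, here is a minimal Python sketch that uses only the standard library. `odds` and `logit` are illustrative helper names rather than a library API. The sketch evaluates both functions for a few probabilities.

```python
import math

def odds(p: float) -> float:
    """Odds of success: P / (1 - P). Defined for 0 <= p < 1."""
    return p / (1.0 - p)

def logit(p: float) -> float:
    """Log-odds: ln(P / (1 - P)). Defined for 0 < p < 1."""
    return math.log(odds(p))

for p in (0.1, 0.5, 0.9):
    # Probabilities in (0, 1) -> odds in (0, inf) -> log-odds in (-inf, inf)
    print(f"p={p:.1f}  odds={odds(p):.4f}  logit={logit(p):+.4f}")
```

The value $p=0.5$ maps to odds of 1 and a logit of 0. The values $p=0.1$ and $p=0.9$ give logits of equal magnitude and opposite sign, which reflects the symmetry of the log-odds around 0.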

3. Sigmoid Function

Isolate $P$ in the above equation, since it is the quantity we want to find. To do this, invert the logit function by exponentiating both sides (raising $e$ to the power of each side):

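$$\frac{P}{1-P}=e^{w^Tx+b}$$

$$P=e^{w^Tx+b}(1-P)$$

$$P=e^{w^Tx+b}-e^{w^Tx+b}P$$

$$P\left(1+e^{w^Tx+b}\right)=e^{w^Tx+b}$$

$$P=\frac{e^{w^Tx+b}}{1+e^{w^Tx+b}}$$

Let $z=w^Tx+b$. Then, dividing the numerator and denominator by $e^{z}$:

$$P=P(y=1\mid x)=\frac{1}{1+e^{-z}}=\sigma(z)$$

$\sigma(z)$ is called the Sigmoid Function.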
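Translating $\sigma(z)=1/(1+e^{-z})$ literally into Python fails for large negative $z$, because `math.exp(1000)` raises `OverflowError`. The sketch below avoids this by switching to the equivalent form $e^{z}/(1+e^{z})$ from the derivation above whenever $z<0$. It also checks that the sigmoid inverts the logit. The helper names are hypothetical and the sketch uses plain Python.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic sigmoid 1 / (1 + e^{-z}), computed in a numerically stable way."""
    if z >= 0:
        # For z >= 0, e^{-z} <= 1, so this form cannot overflow.
        return 1.0 / (1.0 + math.exp(-z))
    # For z < 0, use the equivalent form e^{z} / (1 + e^{z}) to avoid e^{-z} overflowing.
    ez = math.exp(z)
    return ez / (1.0 + ez)

def logit(p: float) -> float:
    """Inverse of the sigmoid: ln(p / (1 - p))."""
    return math.log(p / (1.0 - p))

for z in (-1000.0, -2.0, 0.0, 2.0, 1000.0):
    print(z, sigmoid(z))

# Round trip: the sigmoid undoes the logit (up to floating-point error).
print(sigmoid(logit(0.73)))
```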

![Sigmoid Mapping inputs to [0,1]](https://kush-singh-26.github.io/blogs/static/images/Ml-9.webp)

Extending Binary Models to Multi-Class

As the name suggests, a binary model handles only two-class problems. For more than two classes, a different approach is required.

One vs All Approach

For $K$ classes, we train $K$ separate binary logistic regression classifiers. For each class $i\in\{1,\dots,K\}$, a model is trained to predict the probability that a data point belongs to class $i$ versus all other classes.

- Treat class $i$ as the "Positive" ($y=1$) class.

- Treat all other classes ($j\neq i$) as the "Negative" ($y=0$) class.

For prediction, we run all $K$ classifiers and choose the class whose classifier outputs the highest probability.
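The prediction step of One vs All can be sketched in a few lines of Python. The parameters below are hand-picked, hypothetical values that stand in for $K=3$ binary classifiers that have already been trained. The training step itself is not shown.

```python
import math

def sigmoid(z: float) -> float:
    # Simple form; adequate for the moderate logits used here.
    return 1.0 / (1.0 + math.exp(-z))

def ova_predict(x, classifiers):
    """One-vs-All prediction.

    `classifiers` maps each class label to a (weights, bias) pair from its own
    binary logistic regression, trained with that class as positive (y=1)
    and all other classes as negative (y=0).
    Returns the label whose classifier gives the highest P(y=1 | x), plus all scores.
    """
    scores = {}
    for label, (w, b) in classifiers.items():
        z = sum(wi * xi for wi, xi in zip(w, x)) + b
        scores[label] = sigmoid(z)
    return max(scores, key=scores.get), scores

# Hypothetical, hand-picked parameters for K = 3 classes and n = 2 features.
classifiers = {
    "A": ([2.0, -1.0], 0.0),
    "B": ([-1.0, 2.0], 0.0),
    "C": ([-1.0, -1.0], 1.0),
}
label, scores = ova_predict([1.5, 0.2], classifiers)
print(label, scores)
```

Because the $K$ classifiers are trained independently, their probabilities generally do not sum to 1. One vs All only compares them.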

Softmax Regression / Multinomial Logistic Regression

It models the probabilities of all classes simultaneously, as a single distribution over the $K$ classes. Instead of a single weight vector $w$, we now have a weight matrix $W$ of shape $[K\times n]$ (where $n$ is the number of features). Each class $k$ has its own weight vector $w_k$, the $k$-th row of $W$.

For a given input $x$, we compute a score, or logit, $z_k$ for each class $k$:

$$z_k=w_k^Tx+b_k$$

Or in vector notation:

$$z=Wx+b$$

Softmax Function

The sigmoid function maps a single value to $(0,1)$. The Softmax function generalizes this by mapping a vector of $K$ arbitrary real values (logits) to a probability distribution over $K$ classes. The probability that input $x$ belongs to class $k$ is given by:

$$P(y=k\mid x)=\text{softmax}(z)_k=\frac{e^{z_k}}{\sum_{j=1}^{K}e^{z_j}}$$

- It is like a max function but differentiable, hence "soft".

- Sum of probabilities is 1.

- Each output value is in interval (0,1).
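Here is a minimal Python sketch of the forward pass of softmax regression. It assumes hand-picked, hypothetical parameters $W$ and $b$ for $K=3$ classes and $n=2$ features.

The sketch subtracts $\max_j z_j$ before exponentiating, which is a standard numerical-stability trick. The shift cancels in the ratio, so the probabilities are unchanged, but `math.exp` no longer overflows on large logits.

```python
import math

def softmax(z):
    """Map a list of K logits to a probability distribution over K classes.

    Subtracting max(z) first leaves the result unchanged (the shift cancels
    in the ratio) but keeps math.exp from overflowing on large logits.
    """
    m = max(z)
    exps = [math.exp(zk - m) for zk in z]
    total = sum(exps)
    return [e / total for e in exps]

def logits(W, b, x):
    """Compute z = W x + b, where W is a K x n list of rows (one w_k per class)."""
    return [sum(wkj * xj for wkj, xj in zip(w_k, x)) + b_k for w_k, b_k in zip(W, b)]

# Hypothetical parameters: K = 3 classes, n = 2 features.
W = [[1.0, 0.0],
     [0.0, 1.0],
     [-1.0, -1.0]]
b = [0.0, 0.0, 0.5]
x = [2.0, 1.0]

z = logits(W, b, x)      # [2.0, 1.0, -2.5]
p = softmax(z)
print(z, p, sum(p))
print(softmax([1000.0, 999.0]))  # no overflow thanks to the max shift
print(softmax([2.0, 0.0])[0])    # equals sigmoid(2.0) = 1 / (1 + e^{-2})
```

With two classes and logits $[z, 0]$, softmax gives $e^{z}/(e^{z}+1)=\sigma(z)$. The binary sigmoid is therefore the $K=2$ special case.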

Loss Function : Maximum Likelihood Estimation (MLE)

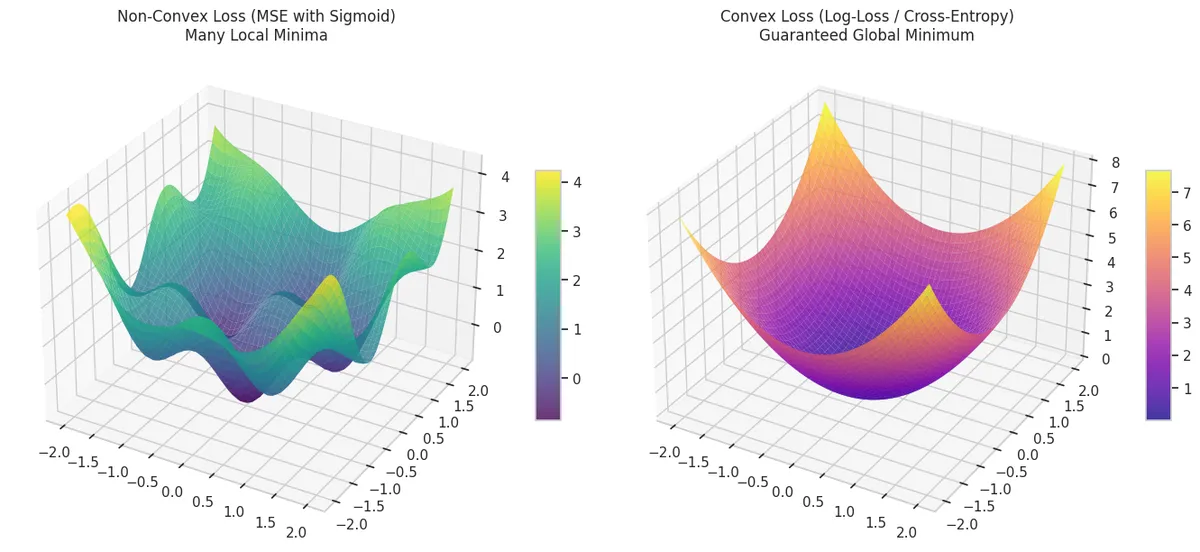

Why not use MSE?

MSE is used in Linear Regression because, for a linear model, it produces a bowl-shaped convex loss curve. No matter where we start on the curve, gradient descent (with a suitable learning rate) will eventually reach the absolute bottom (the Global Minimum).

However, applying MSE to Logistic Regression, where the prediction passes through the non-linear sigmoid function, results in a non-convex loss curve with a wavy, complex shape.

Convex function: a function is convex if the line segment between any two points on its graph never lies below the graph.

The non-convex curve has many valleys (local minima). If the algorithm starts in the wrong spot, it might get stuck in a shallow valley and think it has found the best solution when it hasn't. Hence, MSE is not used for Logistic Regression.
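The convexity claim can be checked numerically on the smallest possible case. This Python sketch assumes one training example with $x=1$, $y=1$ and no bias, so both losses are functions of a single weight $w$.

The sketch measures local curvature with the second difference $f(w-h)-2f(w)+f(w+h)$, where a negative value means the curve is locally concave. It only demonstrates that MSE is non-convex in this toy setting; it does not by itself exhibit multiple local minima.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

# Toy setup (assumed for illustration): one example with x = 1, y = 1,
# so the prediction is sigmoid(w) and each loss is a function of w alone.
def mse(w: float) -> float:
    return (sigmoid(w) - 1.0) ** 2

def bce(w: float) -> float:
    return -math.log(sigmoid(w))

def second_difference(f, w: float, h: float = 1.0) -> float:
    """Discrete curvature f(w-h) - 2 f(w) + f(w+h); negative means locally concave."""
    return f(w - h) - 2.0 * f(w) + f(w + h)

for w in (-5.0, 0.0, 5.0):
    print(f"w={w:+.0f}  MSE curvature={second_difference(mse, w):+.5f}  "
          f"BCE curvature={second_difference(bce, w):+.5f}")
```

In this setting the MSE curvature is negative at $w=-5$ and positive at $w=5$, so the curve is not convex. By contrast, $-\ln\sigma(w)=\ln(1+e^{-w})$ has positive curvature everywhere.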

Binary Cross-Entropy (BCE) Loss

Because MSE is unsuitable here, BCE is instead derived using Maximum Likelihood Estimation (MLE).

Instead of measuring the distance between the prediction and the target (like MSE), MLE asks a statistical question: What parameters (w) would maximize the probability of observing the data we actually have?

Assumption : The target y follows a Bernoulli Distribution, because the output can only be 0 or 1.

$$P(y\mid x)=\begin{cases}\hat{y} & \text{if } y=1\\ 1-\hat{y} & \text{if } y=0\end{cases}$$

- If the actual class is 1, we want the model to predict a high probability ($\hat{y}$).

- If the actual class is 0, we want the model to predict a low probability (which means $1-\hat{y}$ is high).

This can be written compactly as:

$$P(y\mid x)=\hat{y}^{\,y}(1-\hat{y})^{1-y}$$

Assuming $m$ independent training examples, the Likelihood $L(w)$ of the parameters $w$ is the product of the probabilities of the observed data:

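$$L(w)=\prod_{i=1}^{m}\left(\hat{y}^{(i)}\right)^{y^{(i)}}\left(1-\hat{y}^{(i)}\right)^{1-y^{(i)}}$$

To simplify differentiation and avoid numerical underflow (a product of many probabilities quickly rounds to zero), we maximize the Log-Likelihood $\ell(w)$ instead. The logarithm turns the product ($\prod$) into a sum ($\sum$).

Taking the natural log ($\ln$):

$$\ell(w)=\ln L(w)=\sum_{i=1}^{m}\left[y^{(i)}\ln\left(\hat{y}^{(i)}\right)+\left(1-y^{(i)}\right)\ln\left(1-\hat{y}^{(i)}\right)\right]$$

However, optimization algorithms are designed to minimize an error, not maximize a likelihood. We therefore negate the log-likelihood.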

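Thus, the final Binary Cross-Entropy Loss, also called the negative log-likelihood (or log loss), is:

$$-\ln L(w)=-\sum_{i=1}^{m}\left[y^{(i)}\ln\left(\hat{y}^{(i)}\right)+\left(1-y^{(i)}\right)\ln\left(1-\hat{y}^{(i)}\right)\right]$$

Dividing by $m$ gives the average BCE loss: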

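$$J(w)=\mathcal{L}_{\text{BCE}}=-\frac{1}{m}\sum_{i=1}^{m}\left[y^{(i)}\ln\sigma\left(w^Tx^{(i)}+b\right)+\left(1-y^{(i)}\right)\ln\left(1-\sigma\left(w^Tx^{(i)}+b\right)\right)\right]$$

- This is a convex function of $w$ and $b$, so gradient descent (with a suitable learning rate) converges toward the global minimum.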
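Here is a direct Python sketch of $J(w)$ for a list-based dataset, using hypothetical toy data. The clipping constant `eps` is an assumption added for numerical safety and is not part of the formula above. It prevents $\ln(0)$ from being evaluated when a prediction saturates at exactly 0 or 1.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def bce_loss(w, b, X, y, eps=1e-12):
    """Average binary cross-entropy J(w, b) over m examples.

    X is a list of feature vectors, y a list of 0/1 labels.
    Predictions are clipped to [eps, 1 - eps] so ln(0) is never evaluated;
    the value of eps is an arbitrary choice for this sketch.
    """
    m = len(X)
    total = 0.0
    for x_i, y_i in zip(X, y):
        y_hat = sigmoid(sum(wj * xj for wj, xj in zip(w, x_i)) + b)
        y_hat = min(max(y_hat, eps), 1.0 - eps)
        total += y_i * math.log(y_hat) + (1 - y_i) * math.log(1.0 - y_hat)
    return -total / m

# Hypothetical toy data: one feature, two examples.
X = [[1.0], [-1.0]]
y = [1, 0]
print(bce_loss([0.0], 0.0, X, y))  # w = 0: every prediction is 0.5, so the loss is ln 2
print(bce_loss([3.0], 0.0, X, y))  # a weight that fits the data gives a lower loss
```

At $w=0$ every prediction is $0.5$, so the loss is $\ln 2\approx 0.693$. A weight that separates the two points lowers the loss. Multiplying $J$ by $-m$ recovers the log-likelihood $\ell(w)$, ignoring the clipping.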

Gradient Descent Derivation

Goal: Minimize $J(w)$. For this, the gradient $\frac{\partial J}{\partial w_j}$ is needed.

1. Derivative of Sigmoid Function

$$\frac{d\sigma(z)}{dz}=\sigma(z)\left(1-\sigma(z)\right)$$

2. Derivative of the loss (Chain Rule)

Using only a single example :

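$$\mathcal{L}=-\left[y\ln\hat{y}+(1-y)\ln(1-\hat{y})\right],\qquad \hat{y}=\sigma(z)$$

Using the chain rule:

$$\frac{\partial\mathcal{L}}{\partial w_j}=\frac{\partial\mathcal{L}}{\partial\hat{y}}\cdot\frac{\partial\hat{y}}{\partial z}\cdot\frac{\partial z}{\partial w_j}$$

- Partial of Loss w.r.t. Prediction:

$$\frac{\partial\mathcal{L}}{\partial\hat{y}}=-\left(\frac{y}{\hat{y}}-\frac{1-y}{1-\hat{y}}\right)=\frac{\hat{y}-y}{\hat{y}(1-\hat{y})}$$

- Partial of Prediction w.r.t. Logit, with $\hat{y}=\sigma(z)=\frac{1}{1+e^{-z}}$:

$$\frac{\partial\hat{y}}{\partial z}=\hat{y}(1-\hat{y})$$

- Partial of Logit w.r.t. Weight, with $z=\sum_j w_jx_j+b$:

$$\frac{\partial z}{\partial w_j}=x_j$$

3. Combining Terms

$$\frac{\partial\mathcal{L}}{\partial w_j}=\frac{\hat{y}-y}{\hat{y}(1-\hat{y})}\cdot\hat{y}(1-\hat{y})\cdot x_j$$

The term $\hat{y}(1-\hat{y})$ cancels out, leaving:

$$\frac{\partial\mathcal{L}}{\partial w_j}=(\hat{y}-y)\,x_j$$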
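A standard way to sanity-check a derivation like this is a finite-difference gradient check. The Python sketch below compares $(\hat{y}-y)x_j$ against central differences of the single-example loss at an arbitrary, hypothetical point. It also checks the sigmoid-derivative identity the same way.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    """Single-example BCE: -[y ln(y_hat) + (1 - y) ln(1 - y_hat)]."""
    y_hat = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
    return -(y * math.log(y_hat) + (1 - y) * math.log(1.0 - y_hat))

def analytic_grad(w, b, x, y):
    """Closed-form gradient from the derivation: dL/dw_j = (y_hat - y) x_j."""
    y_hat = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
    return [(y_hat - y) * xj for xj in x]

def numeric_grad(w, b, x, y, h=1e-6):
    """Central finite differences, perturbing one weight at a time."""
    grad = []
    for j in range(len(w)):
        w_plus = list(w)
        w_plus[j] += h
        w_minus = list(w)
        w_minus[j] -= h
        grad.append((loss(w_plus, b, x, y) - loss(w_minus, b, x, y)) / (2 * h))
    return grad

# Arbitrary test point (assumed values, not from the text).
w, b, x, y = [0.3, -0.7], 0.1, [1.5, 2.0], 1
print(analytic_grad(w, b, x, y))
print(numeric_grad(w, b, x, y))

# Check sigma'(z) = sigma(z) (1 - sigma(z)) with the same central difference.
z, h = 0.8, 1e-6
print((sigmoid(z + h) - sigmoid(z - h)) / (2 * h), sigmoid(z) * (1 - sigmoid(z)))
```

The two gradients should agree to within roughly $10^{-6}$ or better. A large mismatch would point to an error in either the derivation or the code.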

Batch Gradient Descent

Update the weights using the average gradient over all $m$ training examples:

$$w_j := w_j-\alpha\,\frac{1}{m}\sum_{i=1}^{m}\left(\hat{y}^{(i)}-y^{(i)}\right)x_j^{(i)}$$

Stochastic Gradient Descent

Update the weights for each training example $(x^{(i)},y^{(i)})$ individually:

$$w_j := w_j-\alpha\left(\hat{y}^{(i)}-y^{(i)}\right)x_j^{(i)}$$

This rule is identical in form to the Linear Regression update rule, but the definition of $\hat{y}$ has changed from linear ($w^Tx+b$) to sigmoid ($\sigma(w^Tx+b)$).
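Both update rules can be sketched in plain Python, as below. The sketch rests on two assumptions:

- The bias $b$ is updated with the same rule as $w_j$, but with $x_j$ replaced by 1. This is the usual convention, though the text above does not spell it out.
- The learning rates, epoch counts, shuffling seed, and toy data are arbitrary illustrative choices.

```python
import math
import random

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

def batch_gd(X, y, alpha=0.5, epochs=1000):
    """Batch gradient descent: one update per pass, using the average gradient."""
    n, m = len(X[0]), len(X)
    w, b = [0.0] * n, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n, 0.0
        for x_i, y_i in zip(X, y):
            err = predict_proba(w, b, x_i) - y_i  # (y_hat - y)
            for j in range(n):
                grad_w[j] += err * x_i[j]
            grad_b += err                         # bias: x_j replaced by 1
        w = [wj - alpha * gj / m for wj, gj in zip(w, grad_w)]
        b -= alpha * grad_b / m
    return w, b

def sgd(X, y, alpha=0.1, epochs=200, seed=0):
    """Stochastic gradient descent: one update per training example."""
    rng = random.Random(seed)
    n = len(X[0])
    w, b = [0.0] * n, 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)                        # visit examples in a random order
        for i in order:
            err = predict_proba(w, b, X[i]) - y[i]
            w = [wj - alpha * err * xj for wj, xj in zip(w, X[i])]
            b -= alpha * err
    return w, b

# Hypothetical 1-D toy data: label 1 for positive x, 0 for negative x.
X = [[-2.0], [-1.0], [-0.5], [0.5], [1.0], [2.0]]
y = [0, 0, 0, 1, 1, 1]

for name, (w, b) in (("batch", batch_gd(X, y)), ("sgd", sgd(X, y))):
    preds = [int(predict_proba(w, b, x) >= 0.5) for x in X]
    print(name, w, b, preds)
```

Batch gradient descent makes one update per pass over the data. SGD makes $m$ smaller, noisier updates per pass.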

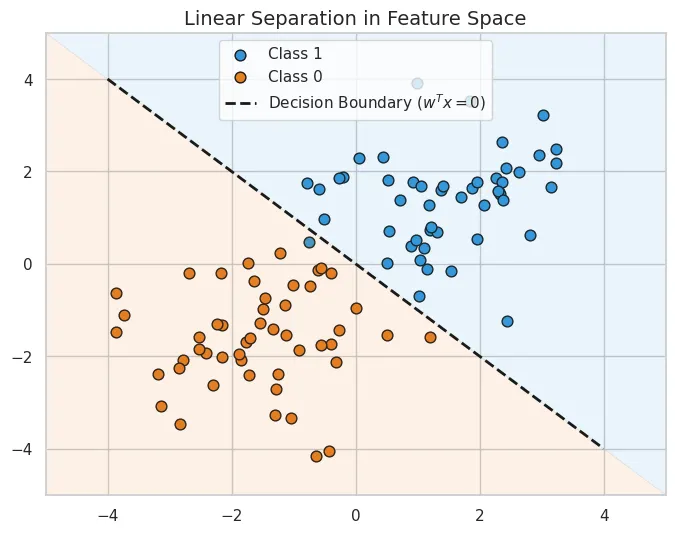

Binary Logistic Regression is a linear classifier.

To prove that a classifier is linear, we must show that the decision boundary separating the classes is a linear function of the input features x (i.e., a line, plane, or hyperplane).

In logistic regression, the probability prediction is given by the sigmoid function applied to a linear combination of inputs.

$$P(y=1\mid x)=\sigma(z)=\frac{1}{1+e^{-z}}$$

where $z$ (the logit) is a linear combination of the features, weighted by $w$:

$$z=w^Tx+b=w_1x_1+w_2x_2+\cdots+w_nx_n+b$$

An instance is classified into the positive class ($y=1$) if the predicted probability is greater than or equal to a threshold, typically $0.5$.

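$$\text{Predict } y=1 \iff P(y=1\mid x)\ge 0.5$$

$$\frac{1}{1+e^{-z}}\ge 0.5 \iff 1\ge 0.5\left(1+e^{-z}\right) \iff 2\ge 1+e^{-z} \iff 1\ge e^{-z}$$

Taking the natural log ($\ln$) of both sides (which preserves the inequality, since $\ln$ is increasing):

$$\ln(1)\ge -z \iff 0\ge -z \iff z\ge 0 \iff w^Tx+b\ge 0$$

The decision boundary is the set of points where the classifier is exactly uncertain (probability $=0.5$), which corresponds to the equation:

$$w^Tx+b=0$$

- This represents a hyperplane (a line in 2D, a plane in 3D).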

Since the boundary that separates the two classes in the feature space is linear, Logistic Regression is, by definition, a linear classifier.

- While the decision boundary is linear, the relationship between the input features and the predicted probability is non-linear (S-shaped or sigmoidal).

- The "linear" label strictly refers to the shape of the separation boundary in space, not the probability curve.
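The equivalence between thresholding the probability at 0.5 and checking the sign of $w^Tx+b$ can be confirmed empirically. This Python sketch uses hypothetical 2-D parameters and 1000 random points. It classifies each point both ways and checks that the two rules agree.

```python
import math
import random

def sigmoid(z: float) -> float:
    # Stable form: avoids overflow of math.exp for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

# Hypothetical 2-D parameters: the boundary is the line 2*x1 - x2 + 0.5 = 0.
w, b = [2.0, -1.0], 0.5

def score(x):
    """The logit z = w^T x + b for a 2-D point x."""
    return w[0] * x[0] + w[1] * x[1] + b

rng = random.Random(42)
points = [[rng.uniform(-5, 5), rng.uniform(-5, 5)] for _ in range(1000)]

by_probability = [sigmoid(score(p)) >= 0.5 for p in points]  # threshold on P(y=1|x)
by_side_of_line = [score(p) >= 0 for p in points]            # side of w^T x + b = 0
print(by_probability == by_side_of_line)
```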

With this, the post on binary classifiers, logistic regression, and Binary Cross-Entropy Loss (negative log-likelihood) comes to an end.